Shih-Lun Wu¹,²*, Chris Donahue¹, Shinji Watanabe¹, Nicholas J. Bryan²

¹ School of Computer Science, Carnegie Mellon University

² Adobe Research

*Work done during an internship at Adobe Research

Abstract

Text-to-music generation models are now capable of generating high-quality music audio in broad styles. However, text control is primarily suitable for the manipulation of global musical attributes like genre, mood, and tempo, and is less suitable for precise control over time-varying attributes such as the positions of beats in time or the changing dynamics of the music. We propose Music ControlNet, a diffusion-based music generation model that offers multiple precise, time-varying controls over generated audio. To imbue text-to-music models with time-varying control, we propose an approach analogous to pixel-wise control of the image-domain ControlNet method. Specifically, we extract controls from training audio yielding paired data, and fine-tune a diffusion-based conditional generative model over audio spectrograms given melody, dynamics, and rhythm controls. While the image-domain Uni-ControlNet method already allows generation with any subset of controls, we devise a new strategy to allow creators to input controls that are only partially specified in time. We evaluate both on controls extracted from audio and controls we expect creators to provide, demonstrating that we can generate realistic music that corresponds to control inputs in both settings. While few comparable music generation models exist, we benchmark against MusicGen, a recent model that accepts text and melody input, and show that our model generates music that is 49% more faithful to input melodies despite having 35x fewer parameters, training on 11x less data, and enabling two additional forms of time-varying control.
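To make the idea of extracting a time-varying control from audio concrete, here is a minimal sketch that computes a dynamics-style curve as frame-wise RMS energy in decibels. This is an illustration under stated assumptions, not the extraction pipeline used for Music ControlNet: the helper name `dynamics_curve_db`, the frame and hop sizes, and the synthetic test signal are all hypothetical choices.

```python
import numpy as np

def dynamics_curve_db(audio, frame_len=2048, hop_len=512, eps=1e-10):
    """Frame-wise RMS energy in dB.

    Hypothetical helper for illustration; the frame/hop sizes are
    assumptions, not values taken from the paper.
    """
    audio = np.asarray(audio, dtype=np.float64)
    if audio.size < frame_len:
        # Pad short inputs so that at least one full frame exists.
        audio = np.pad(audio, (0, frame_len - audio.size))
    n_frames = 1 + (audio.size - frame_len) // hop_len
    # Index matrix of shape (n_frames, frame_len): one row per frame.
    idx = np.arange(frame_len)[None, :] + hop_len * np.arange(n_frames)[:, None]
    frames = audio[idx]
    rms = np.sqrt(np.mean(frames ** 2, axis=1))
    return 20.0 * np.log10(rms + eps)

# Synthetic 2-second tone whose amplitude ramps up (a crescendo).
sr = 22050
t = np.arange(2 * sr) / sr
signal = np.linspace(0.05, 1.0, t.size) * np.sin(2 * np.pi * 440.0 * t)
curve = dynamics_curve_db(signal)
```

Pairing such a curve with the audio it came from gives one (control, audio) training pair; a creator could instead draw a curve of the same shape by hand.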

Bibtex

@article{Wu2023MusicControlNet,
  title={Music ControlNet: Multiple Time-varying Controls for Music Generation},
  author={Wu, Shih-Lun and Donahue, Chris and Watanabe, Shinji and Bryan, Nicholas J.},
  year={2023},
  eprint={TBD},
  archivePrefix={arXiv},
  primaryClass={cs.SD}
}

Examples (Cherry-picked)

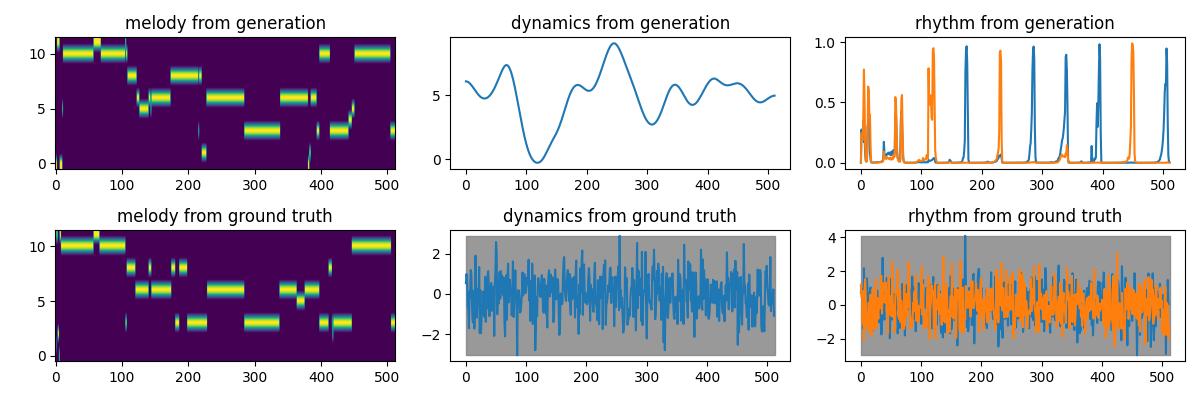

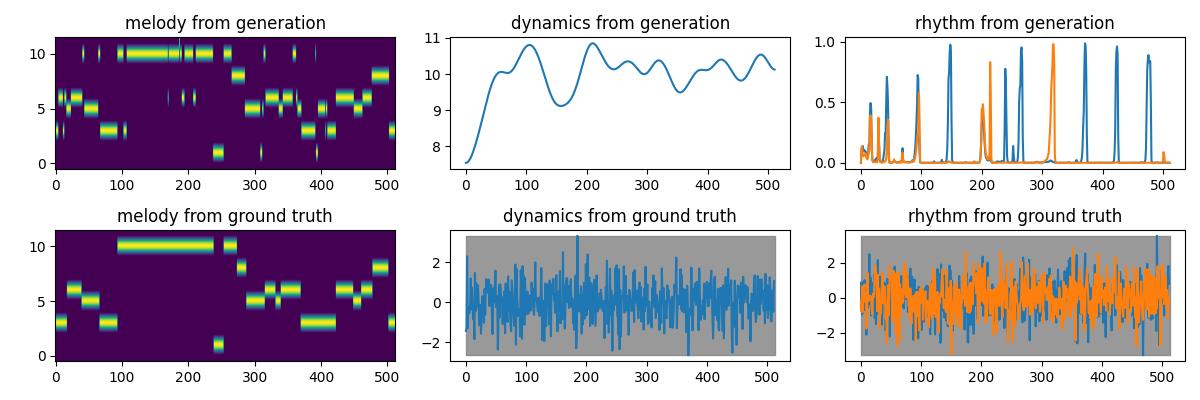

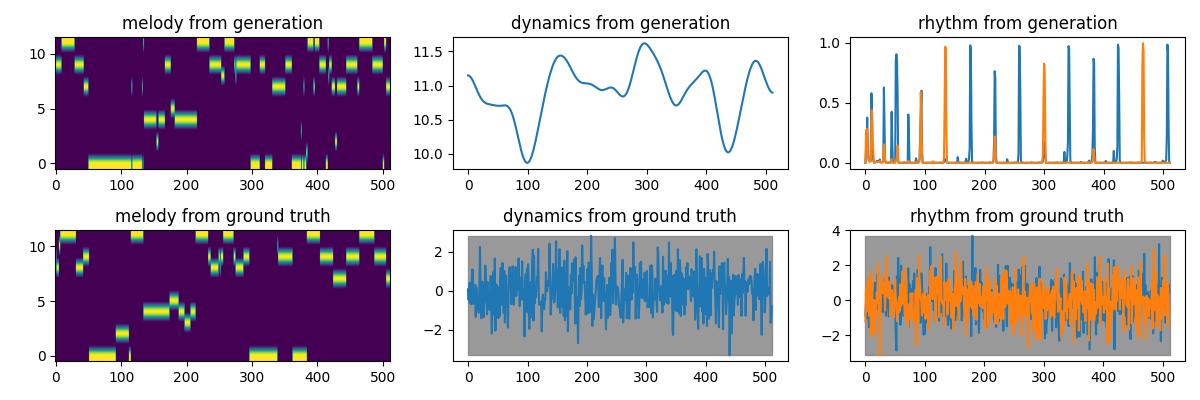

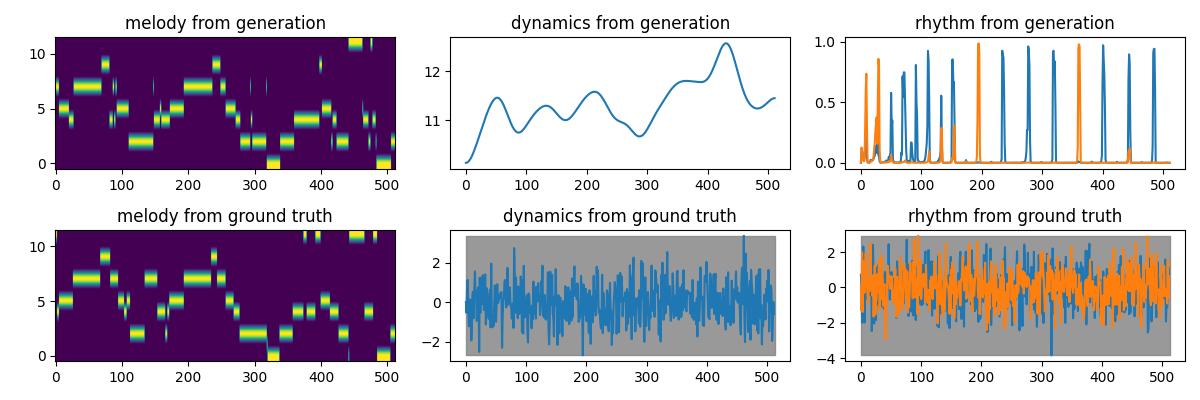

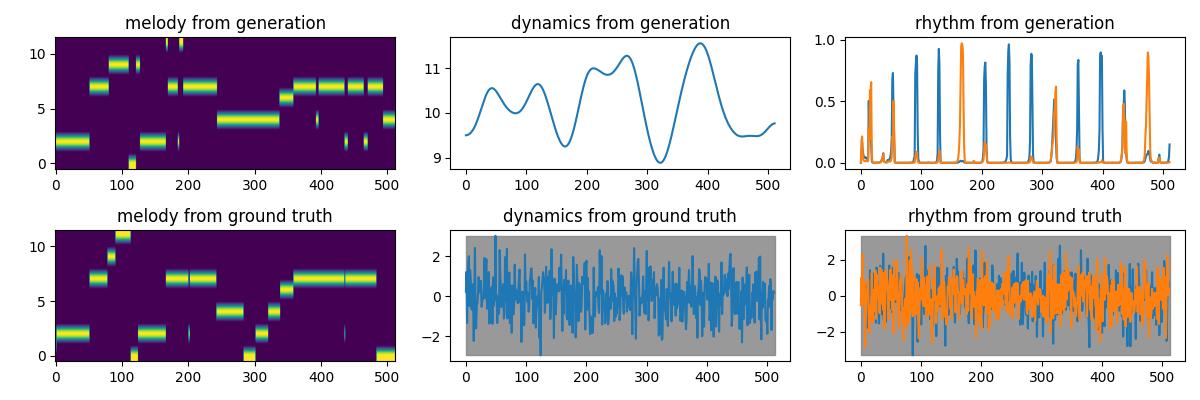

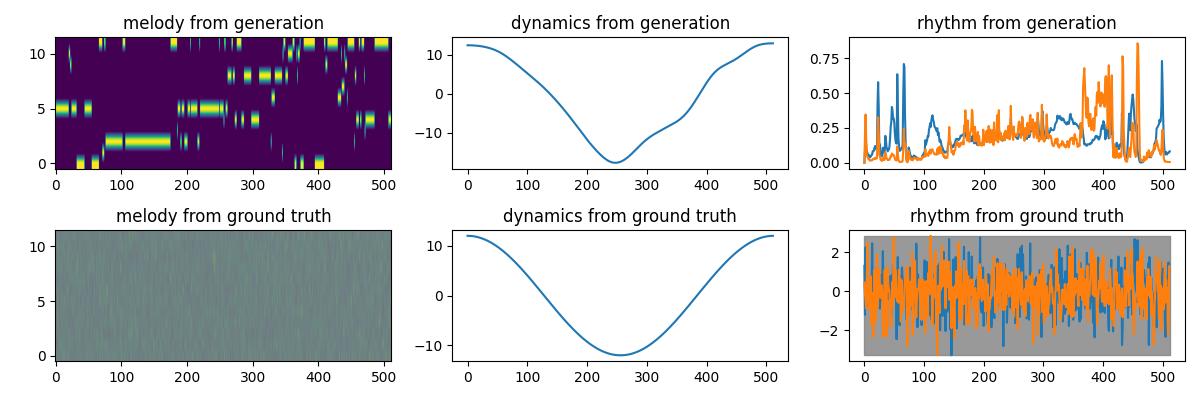

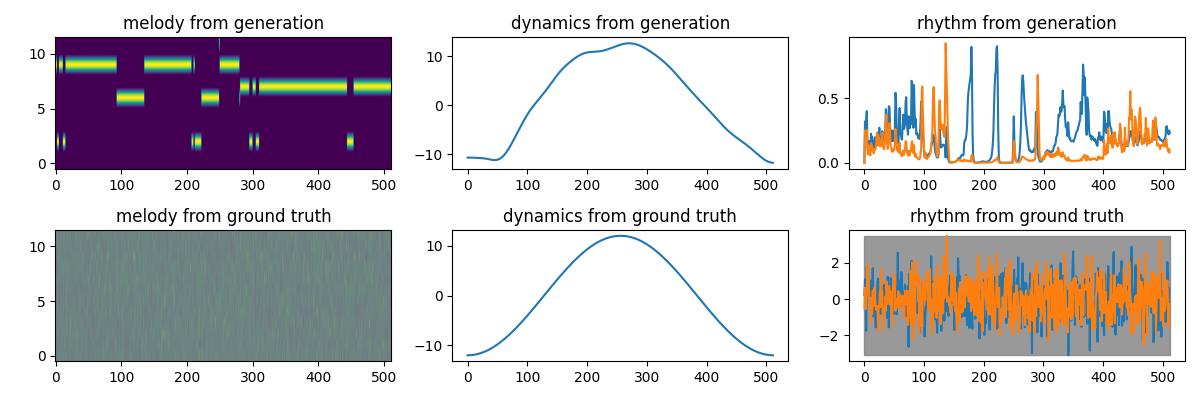

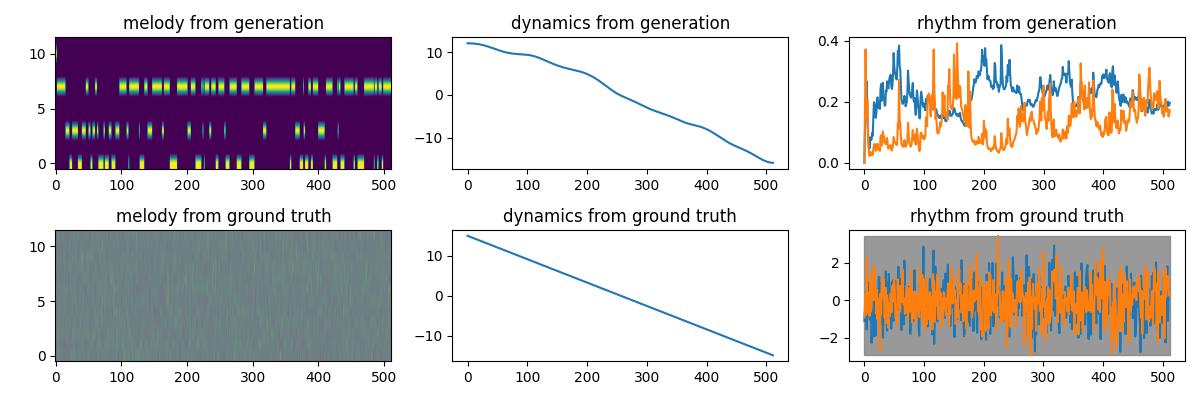

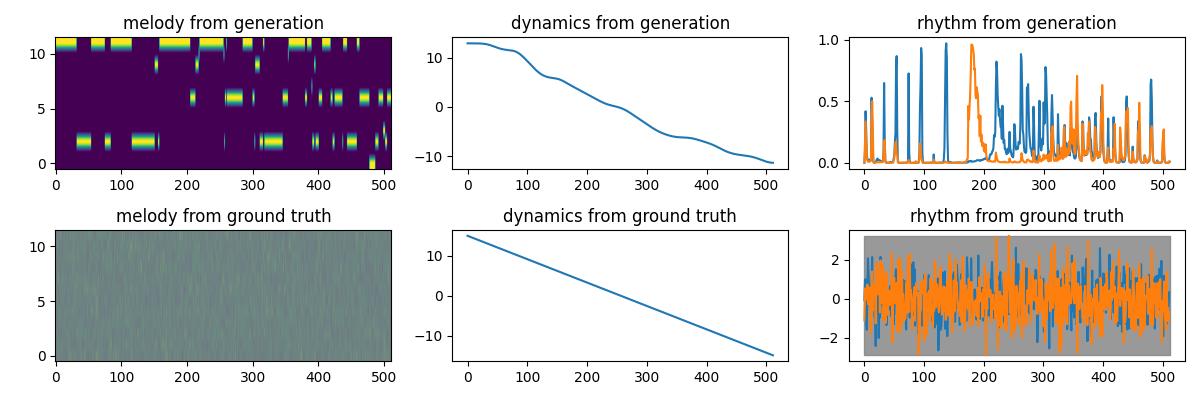

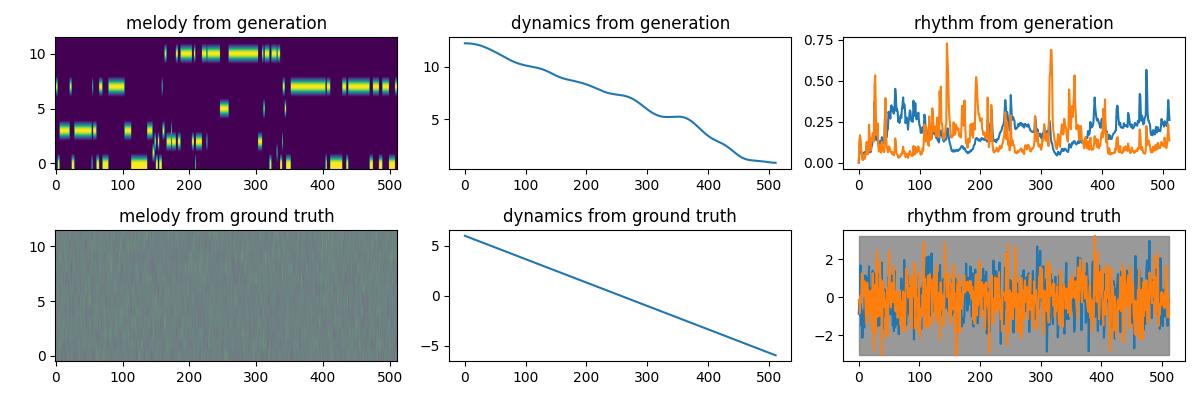

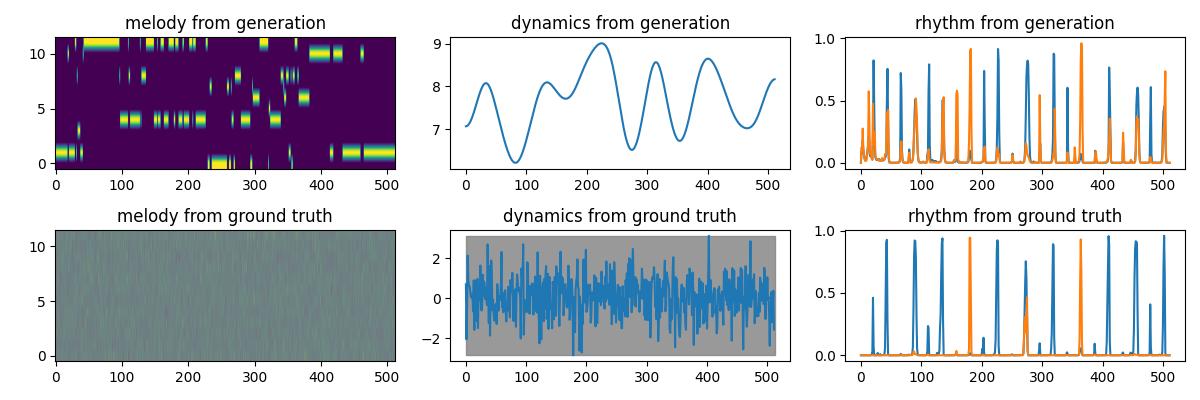

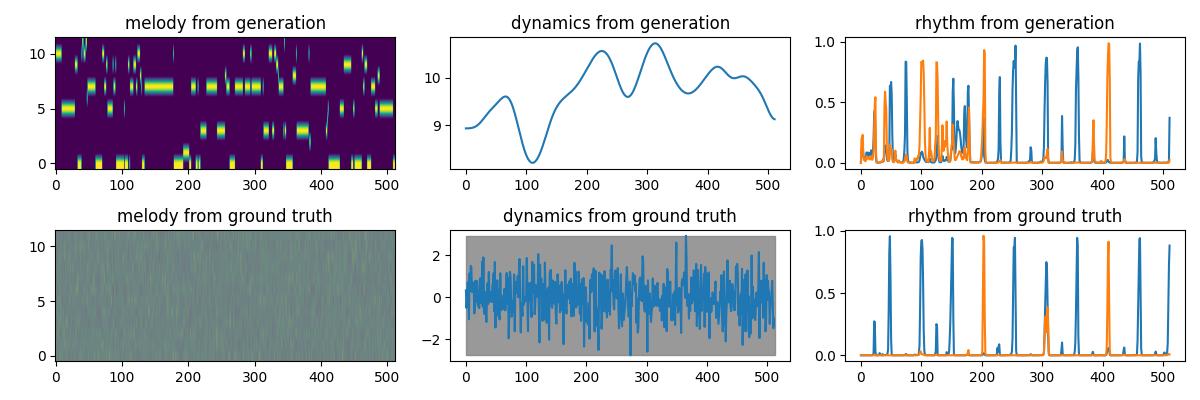

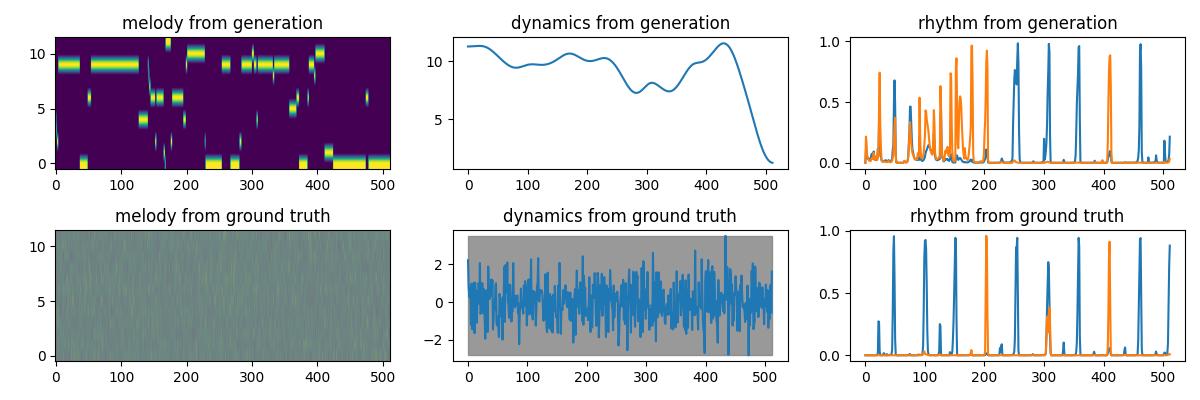

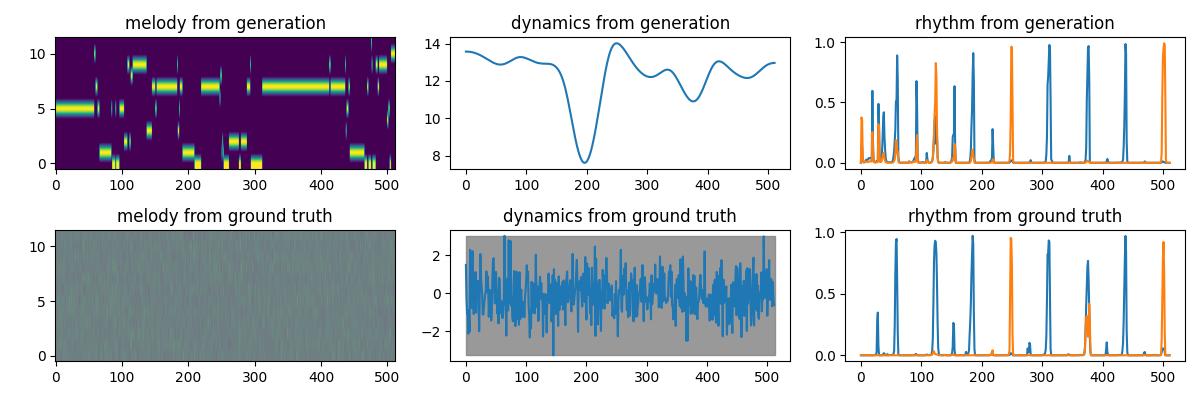

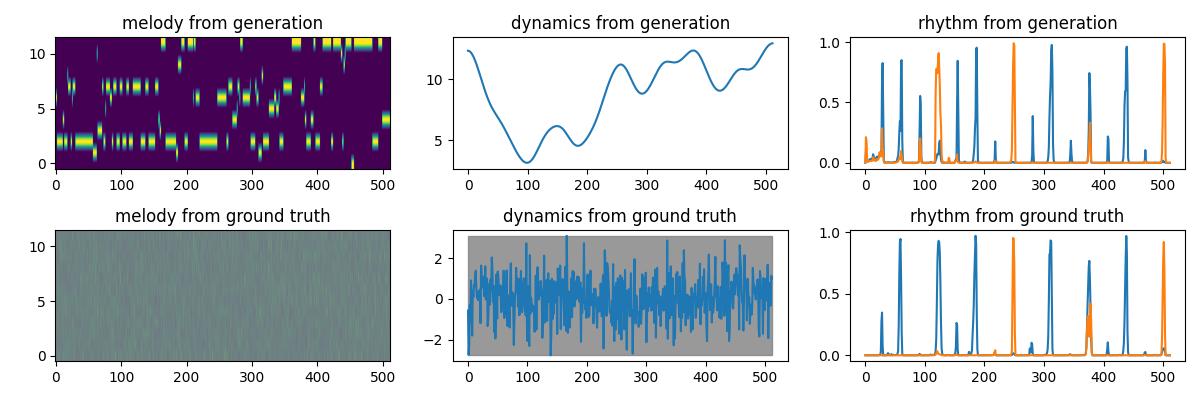

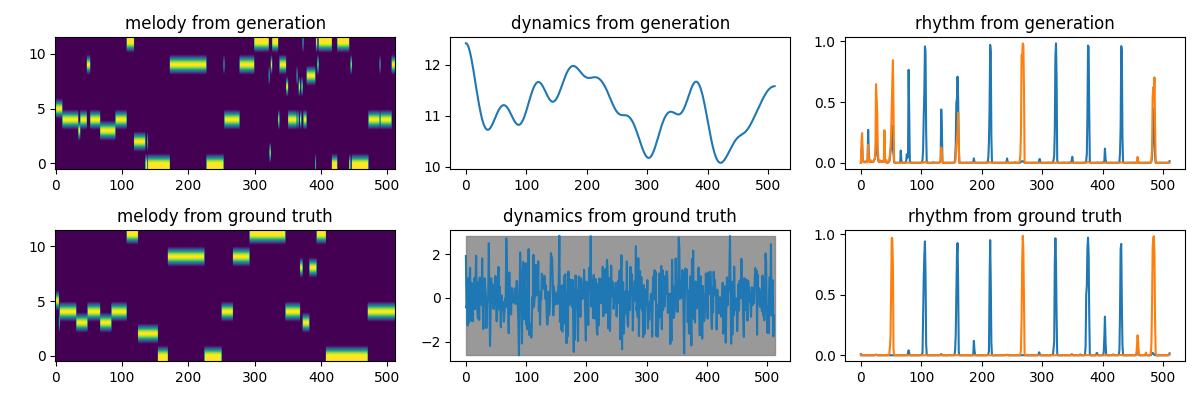

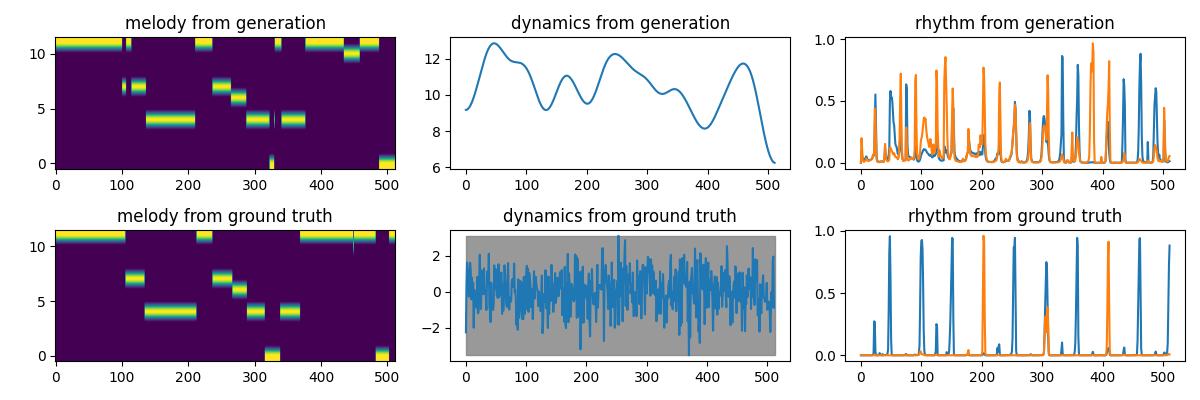

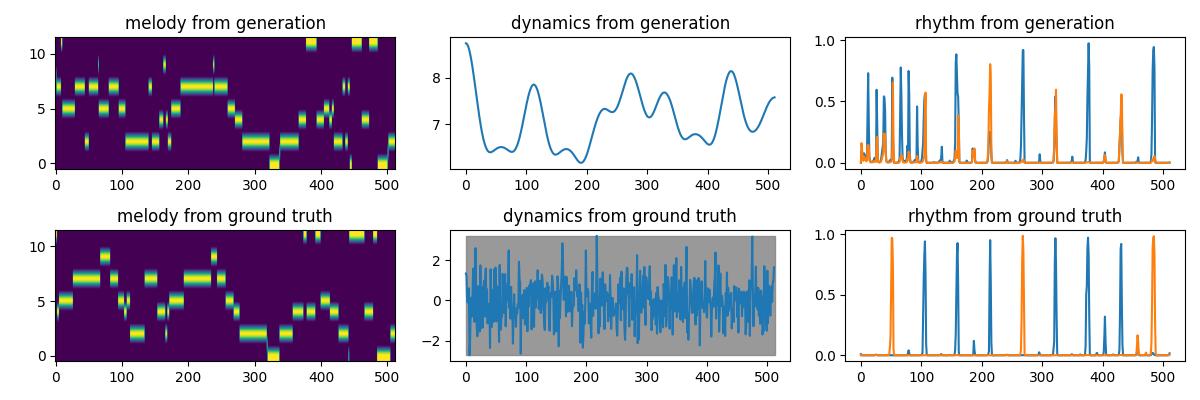

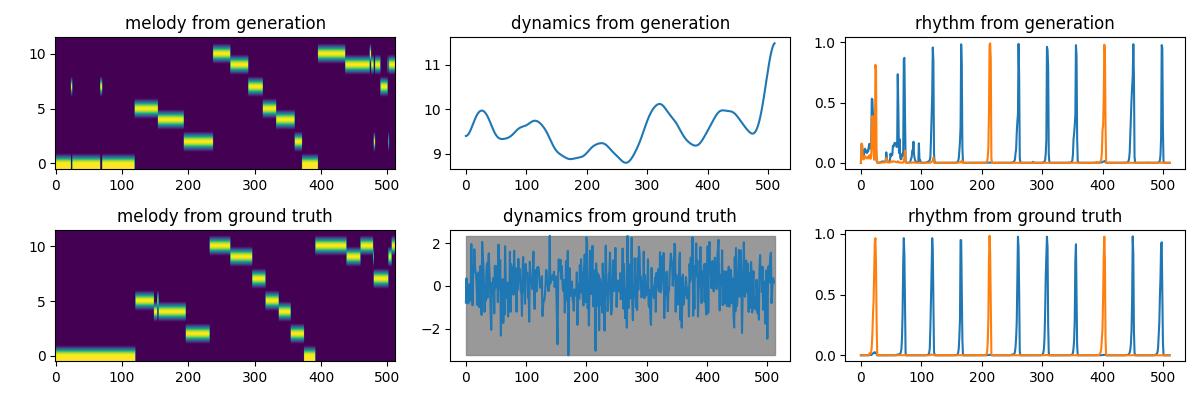

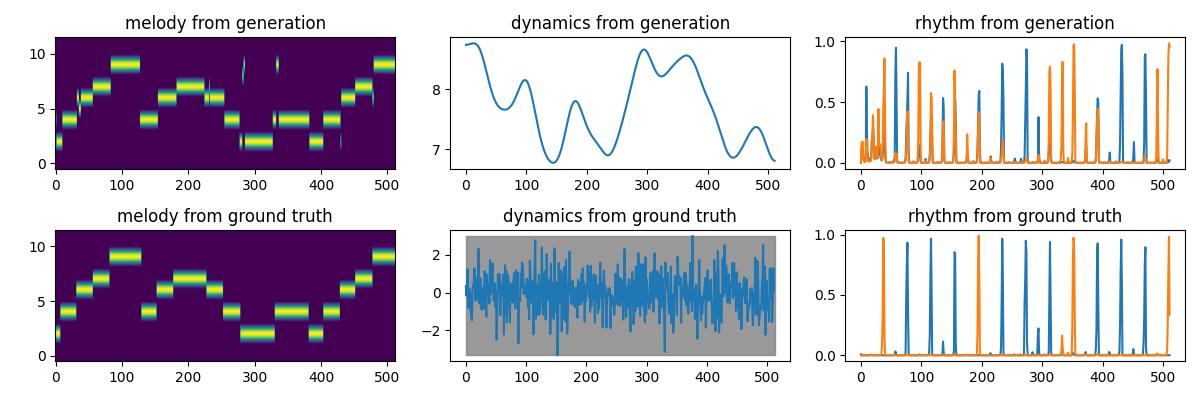

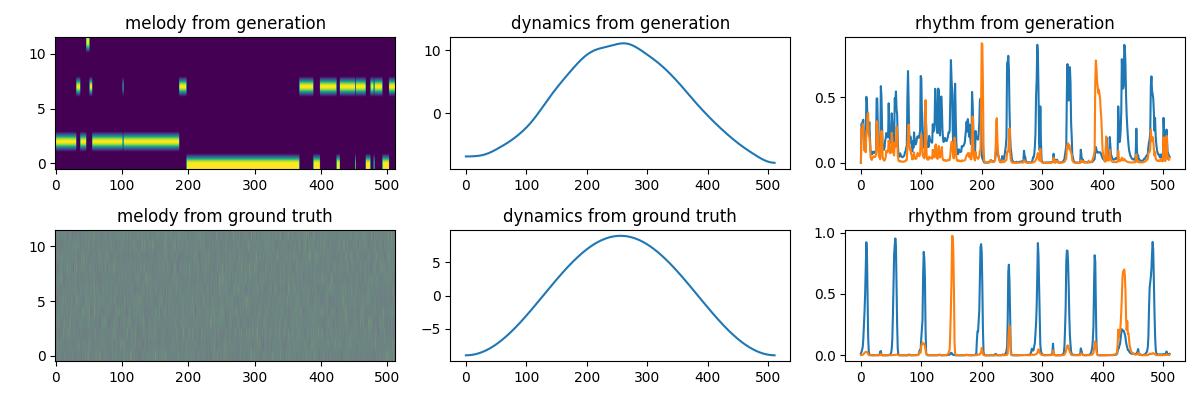

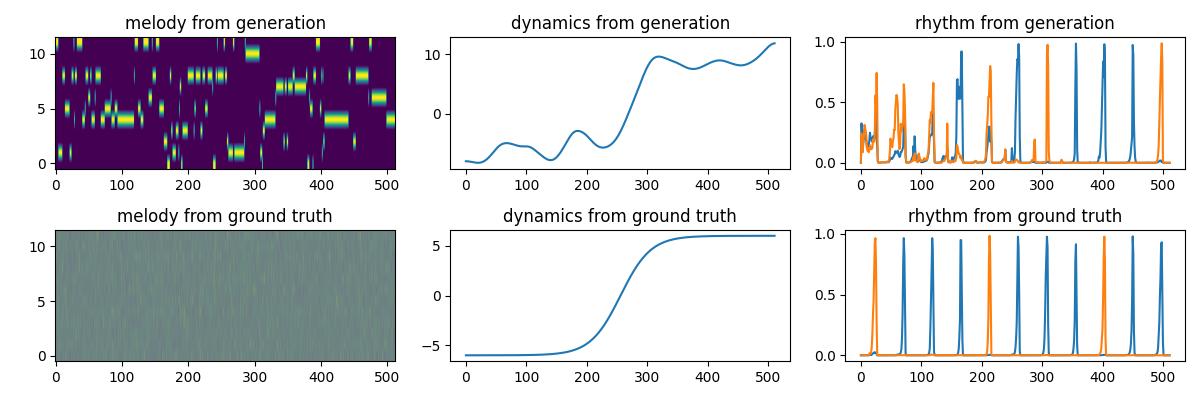

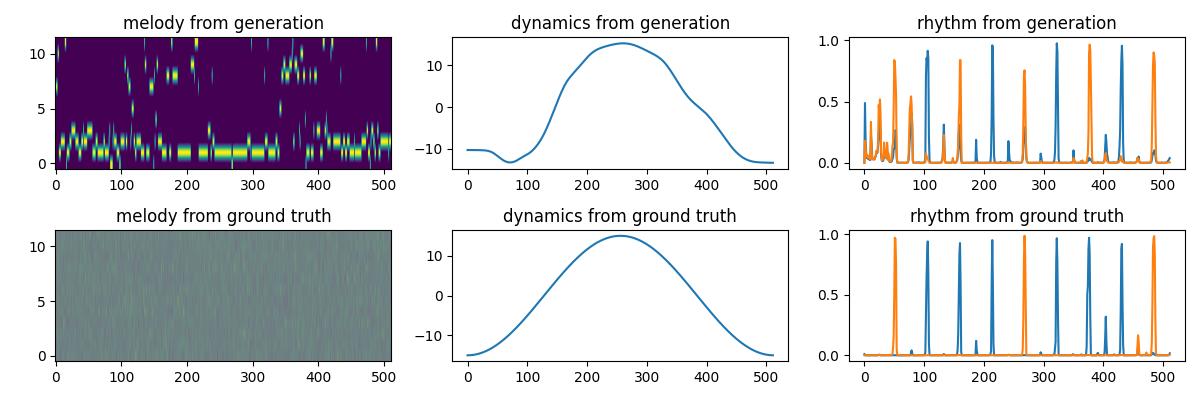

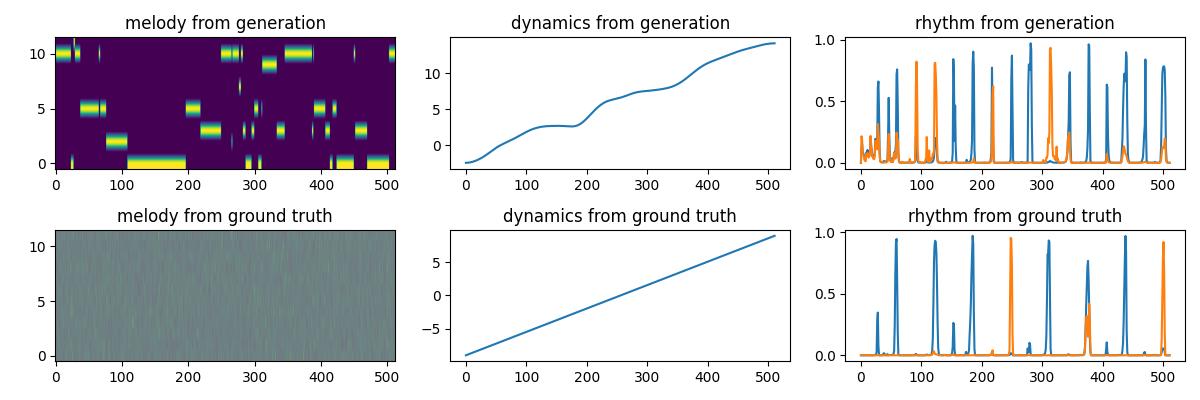

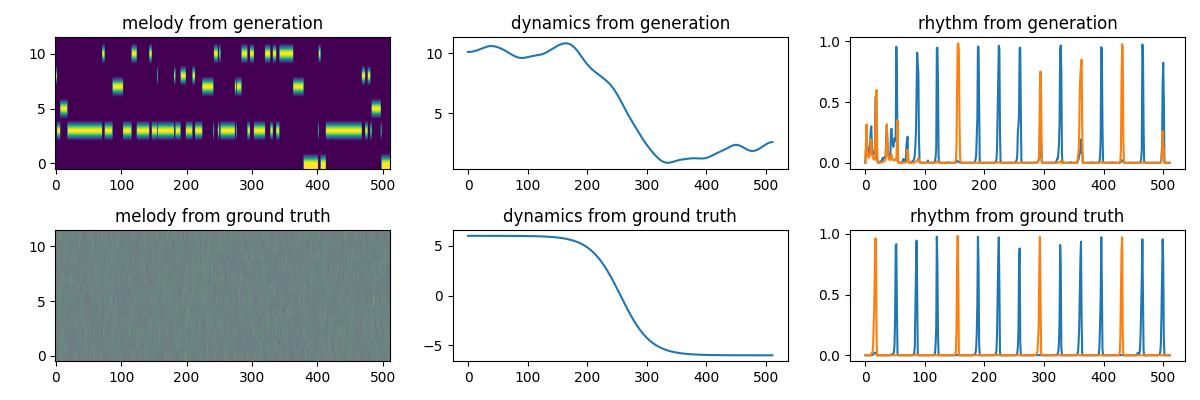

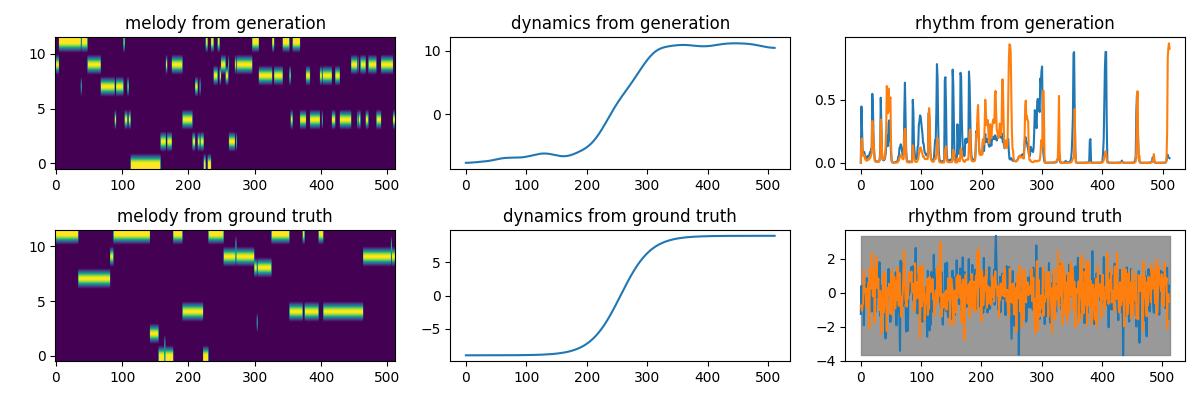

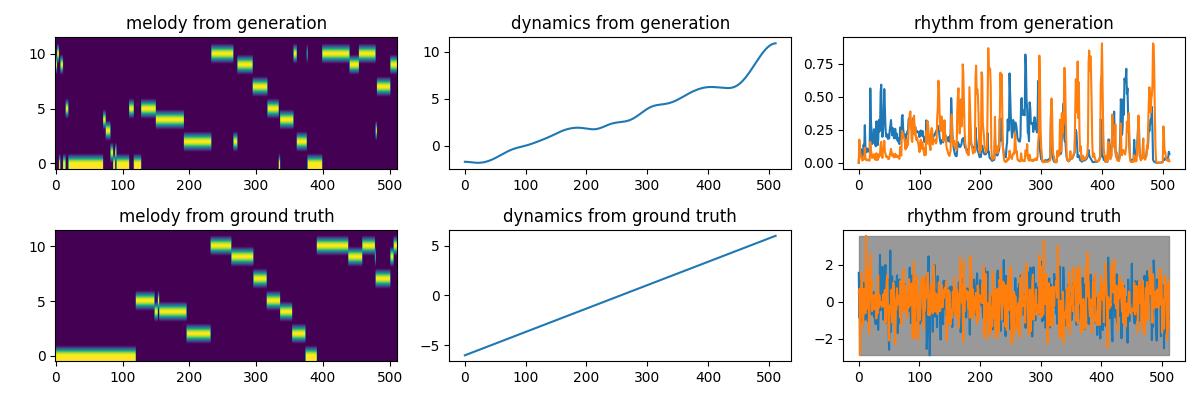

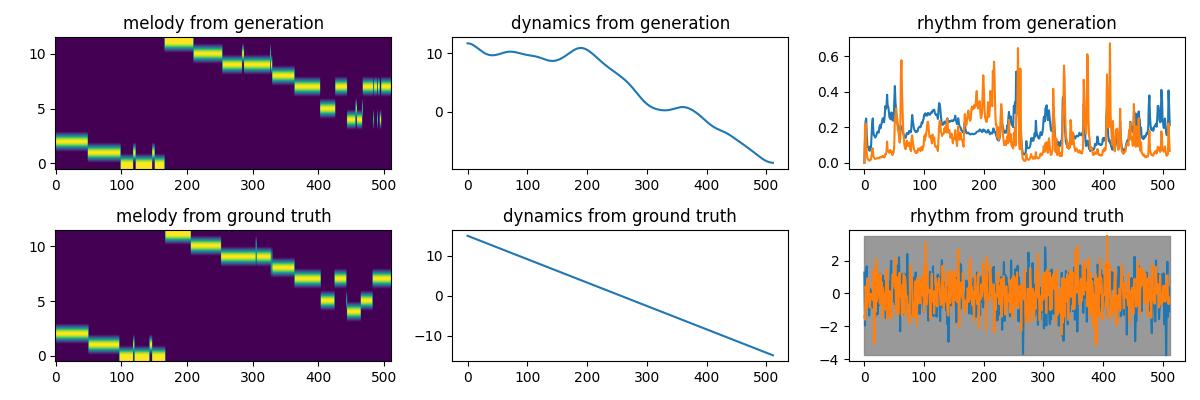

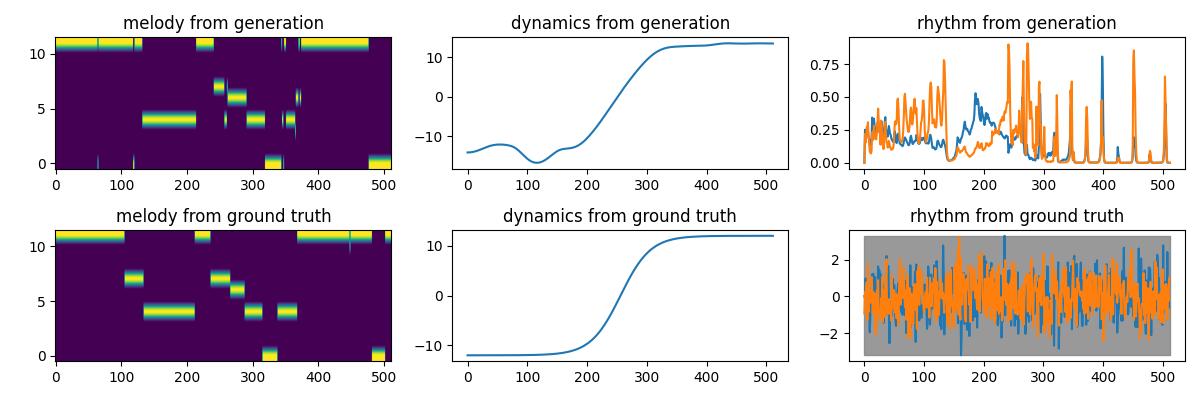

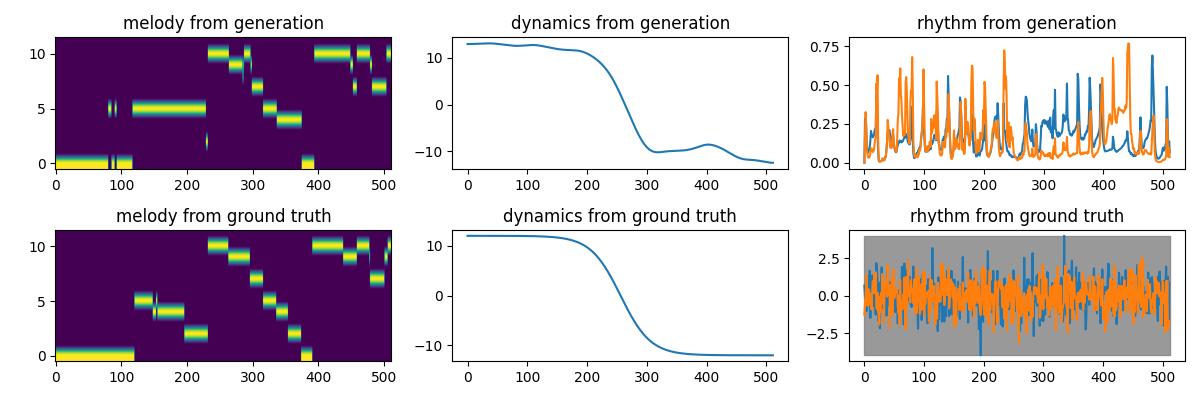

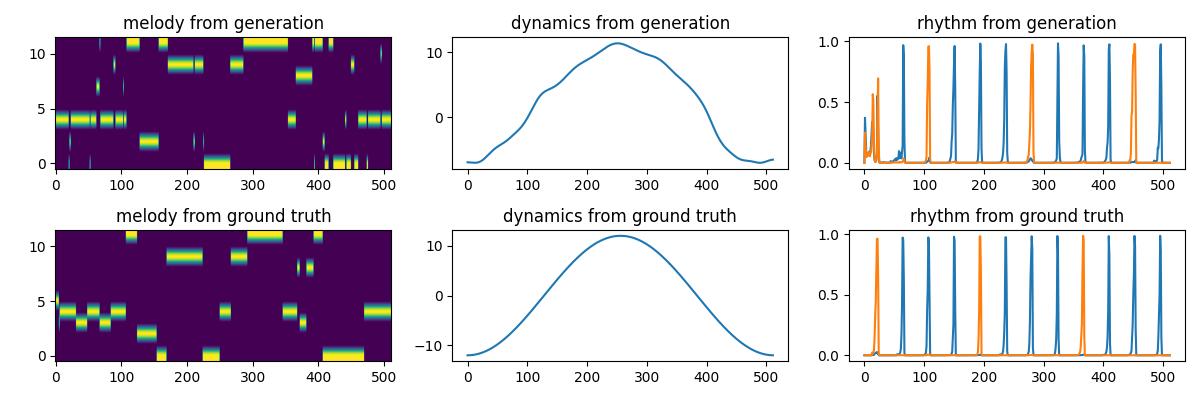

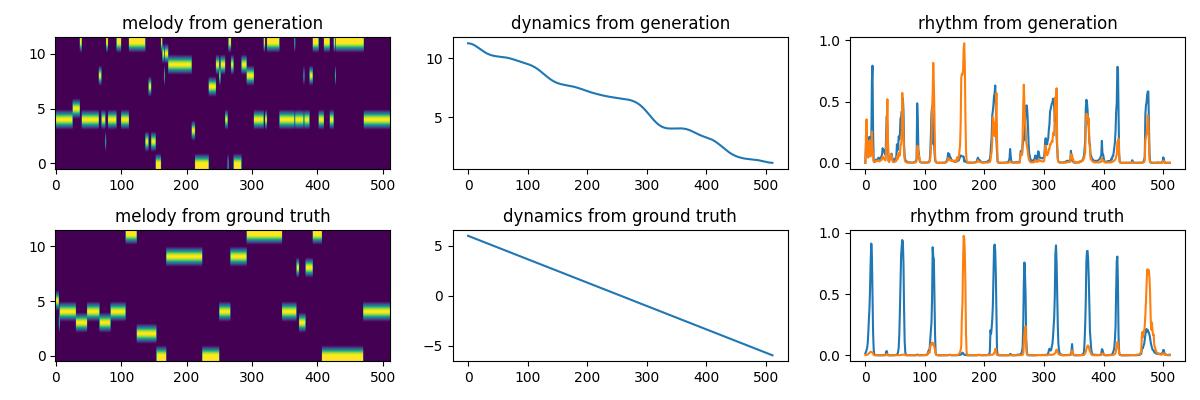

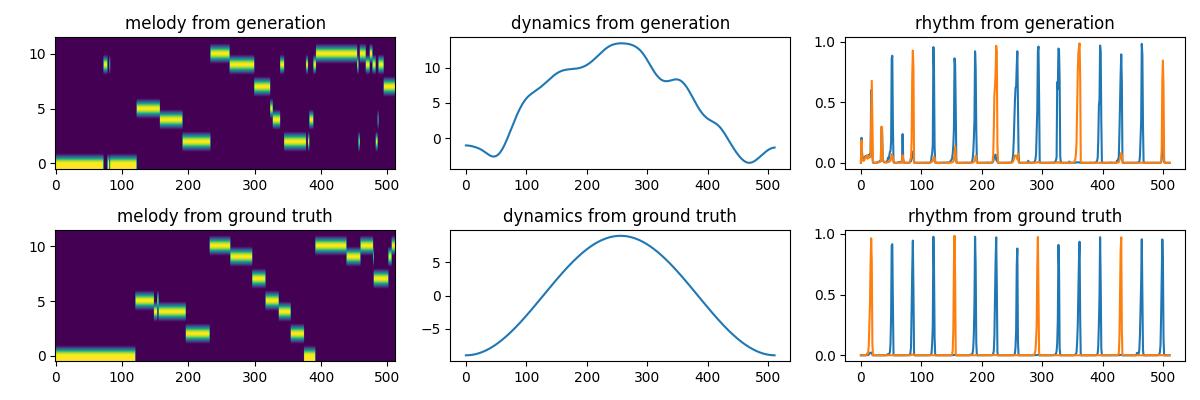

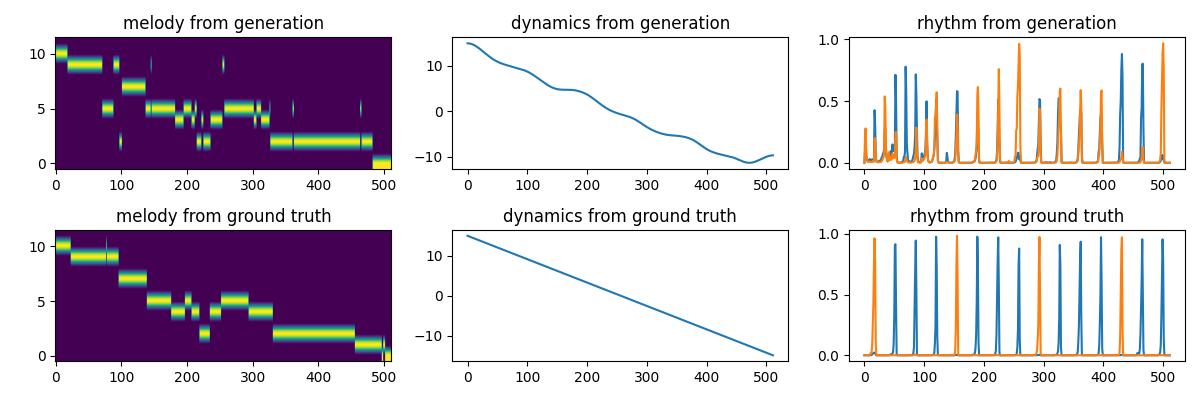

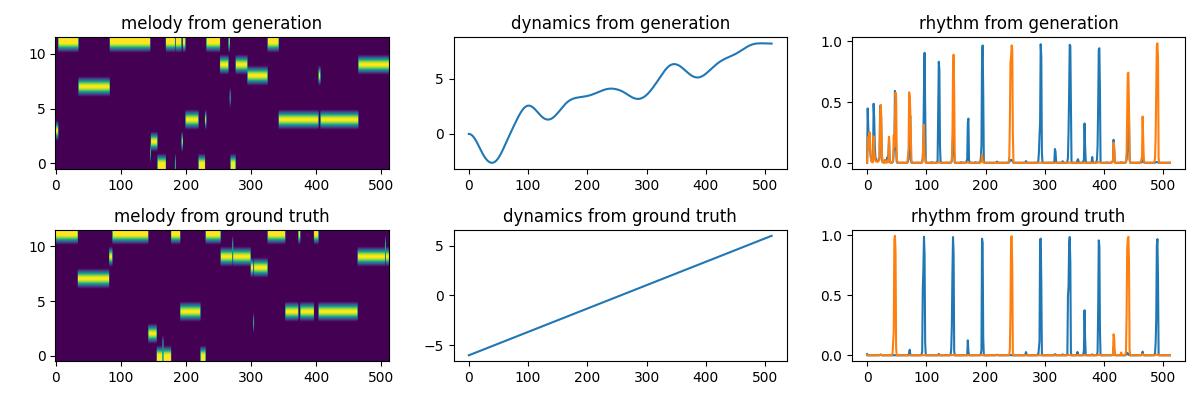

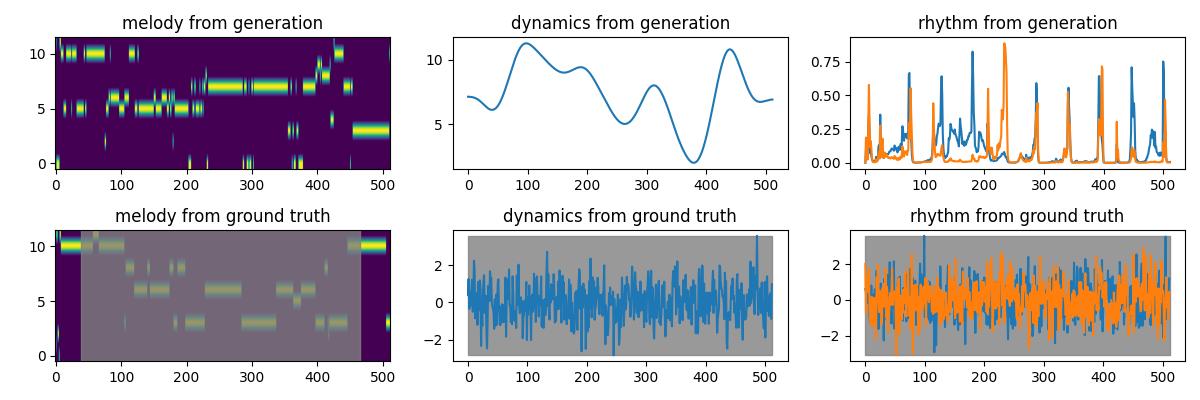

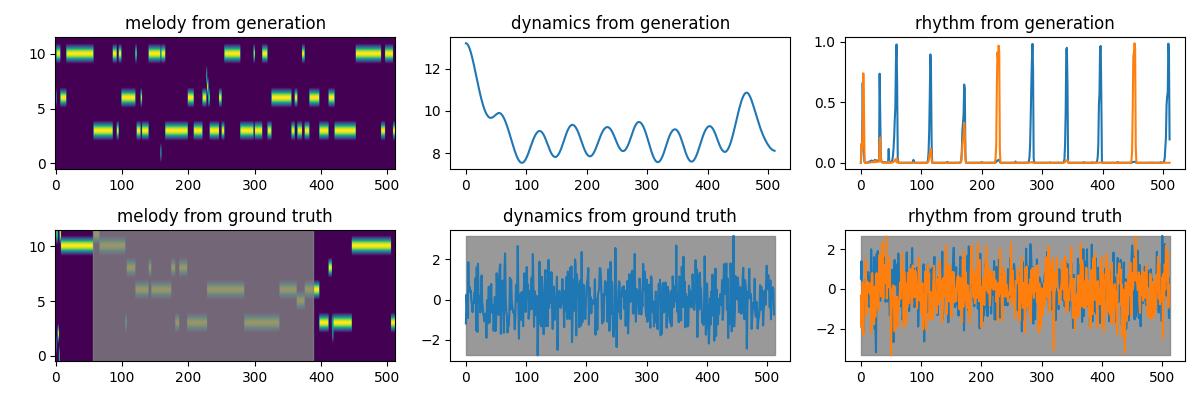

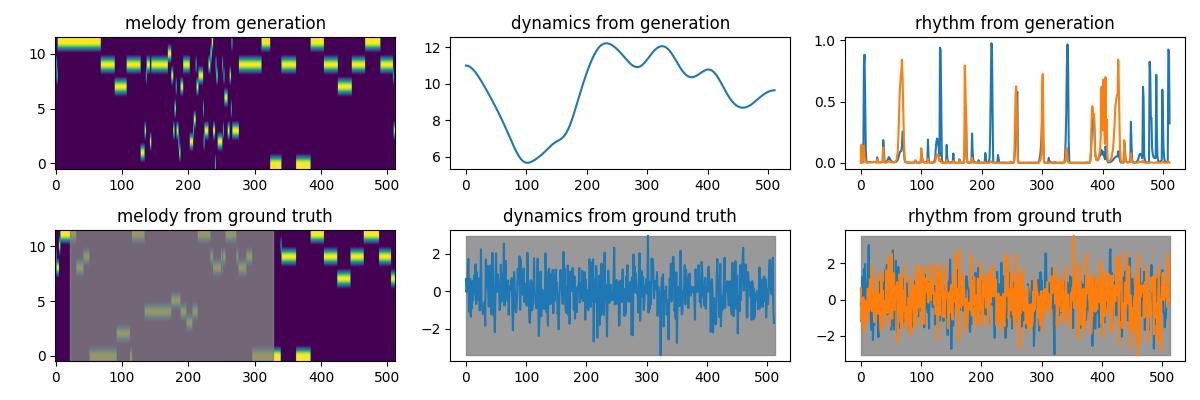

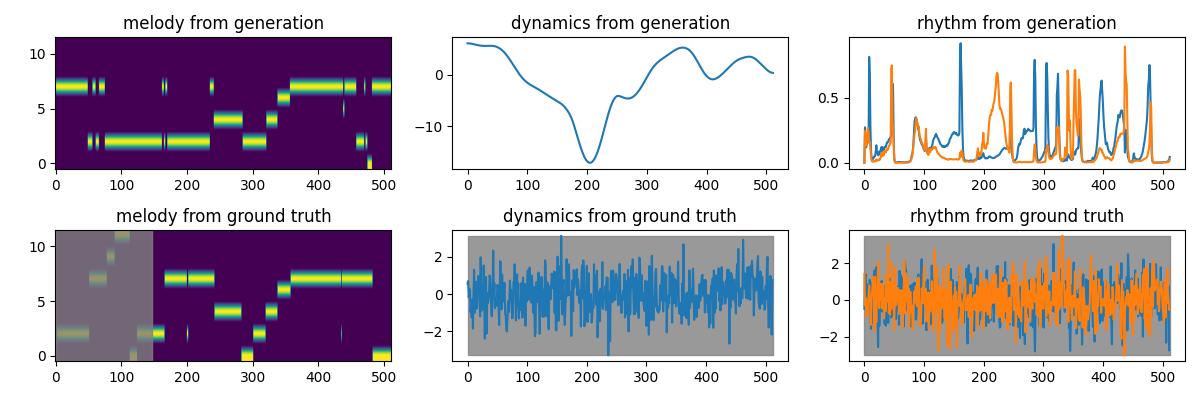

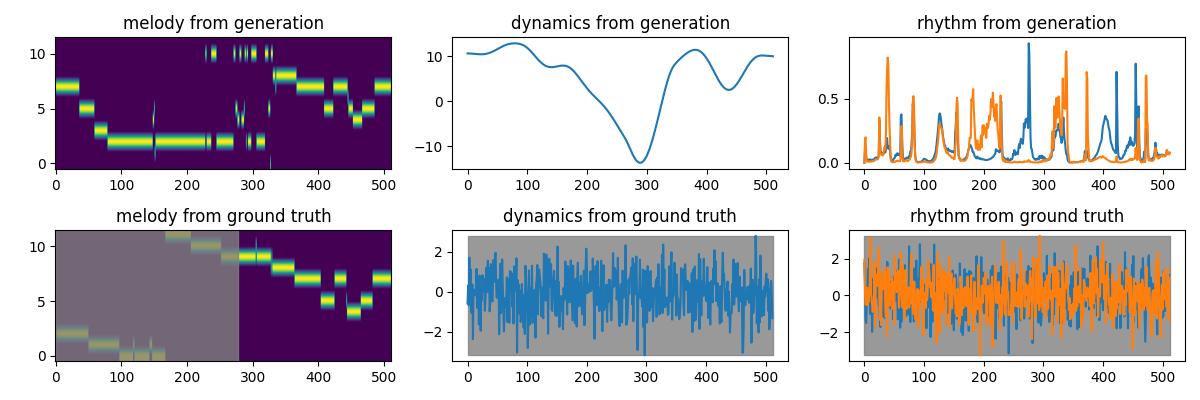

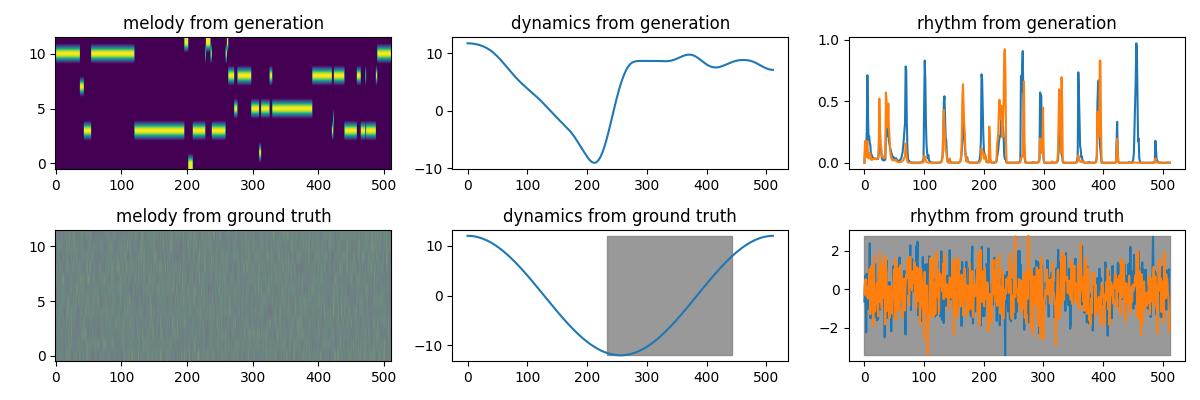

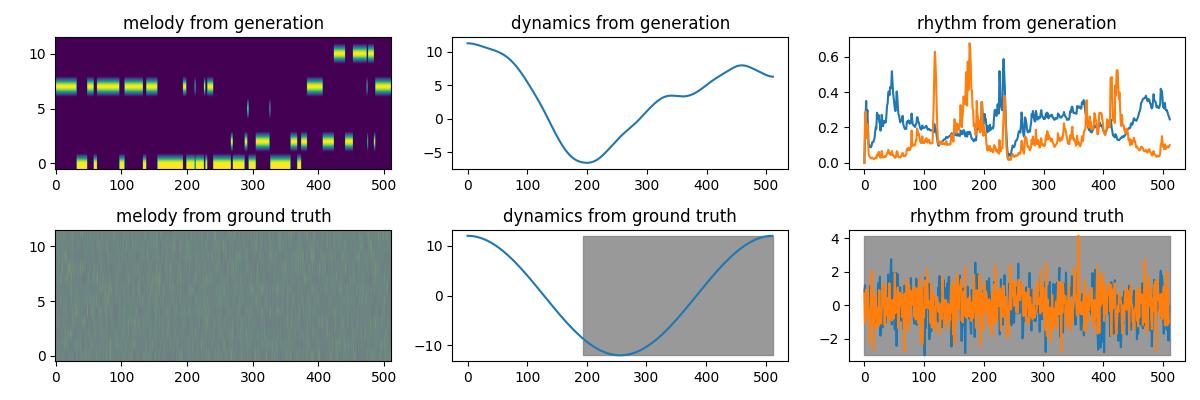

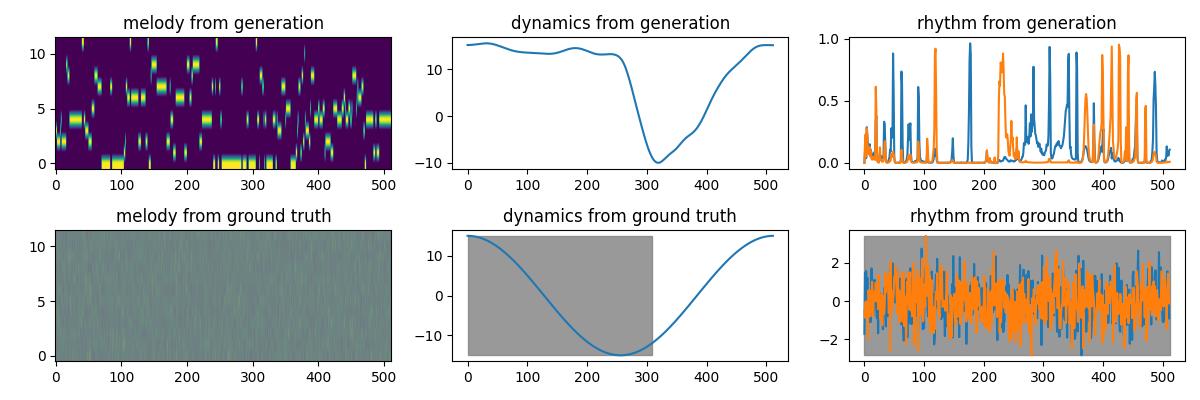

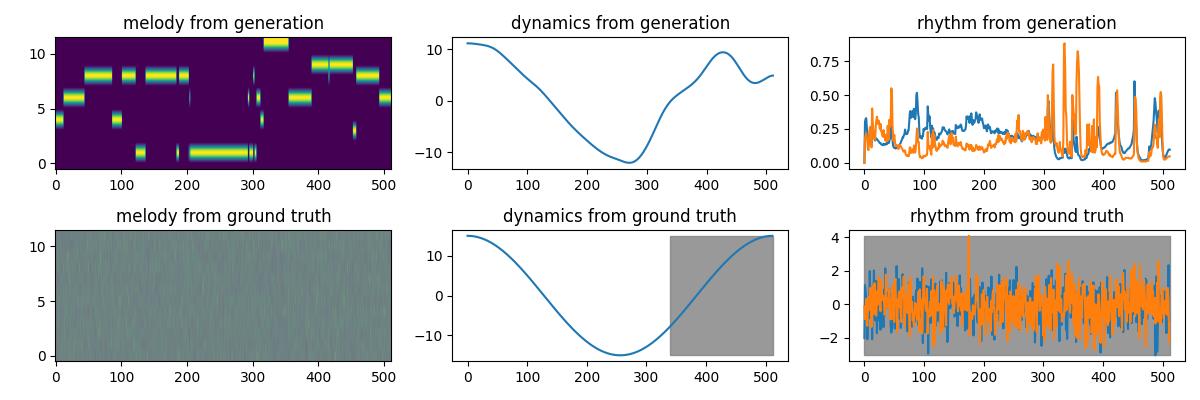

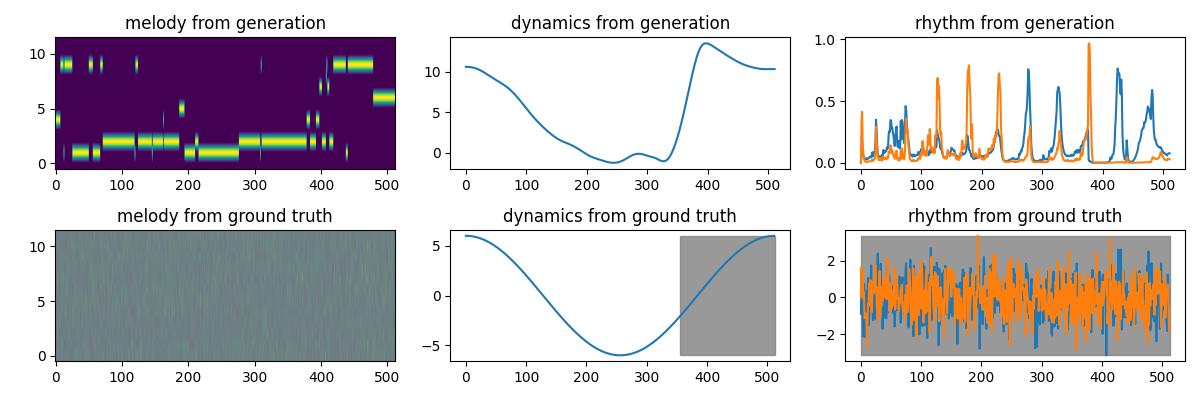

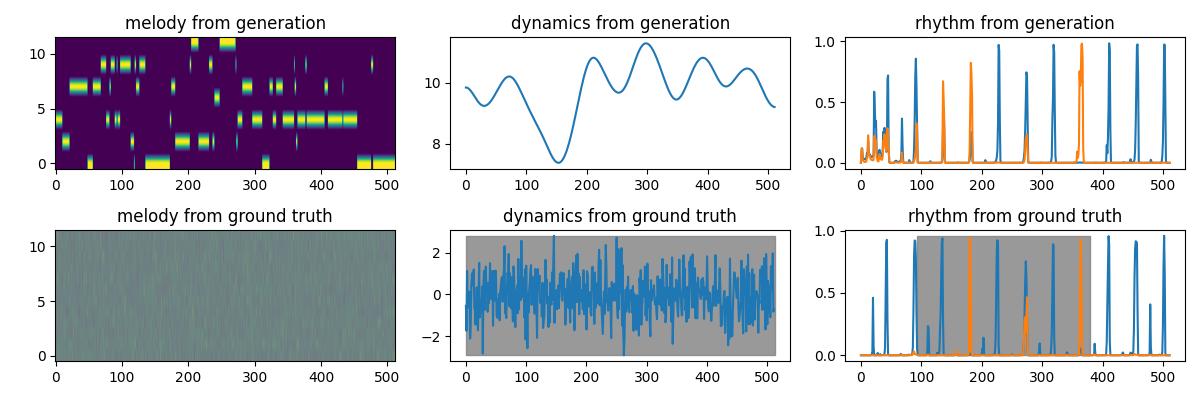

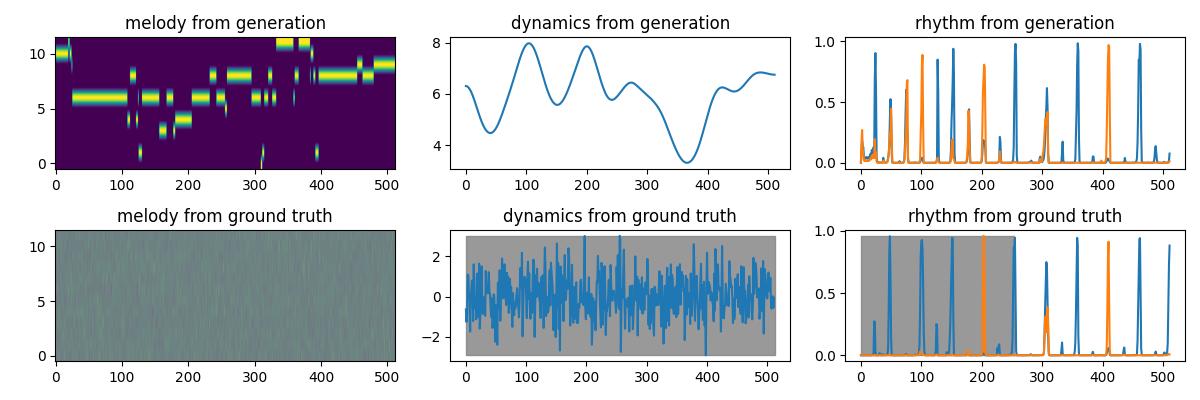

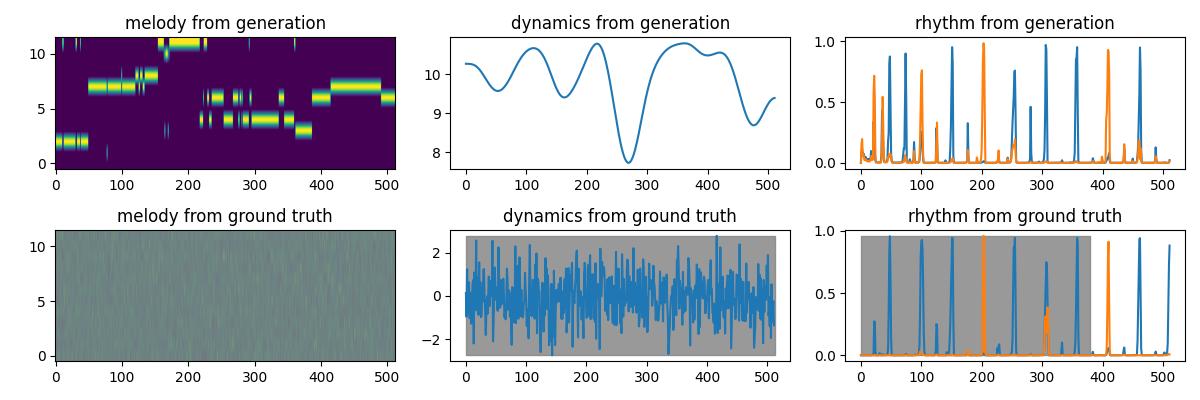

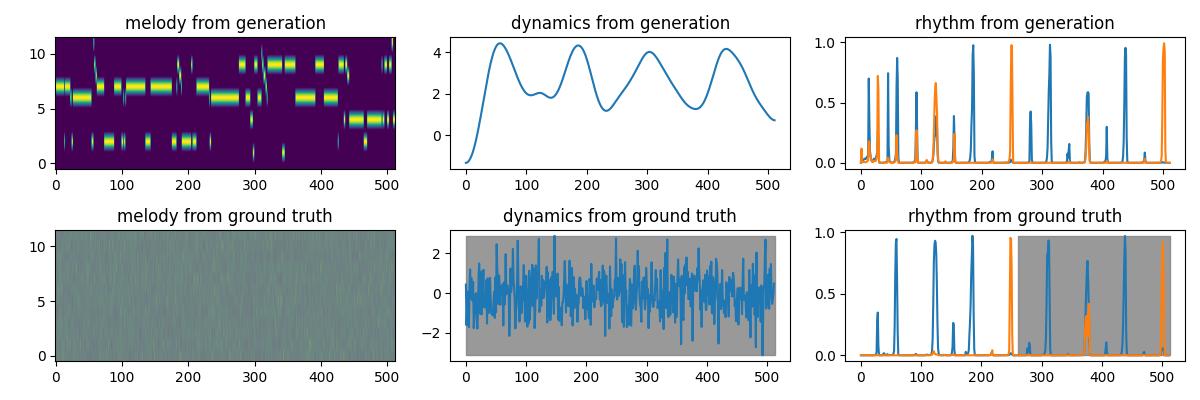

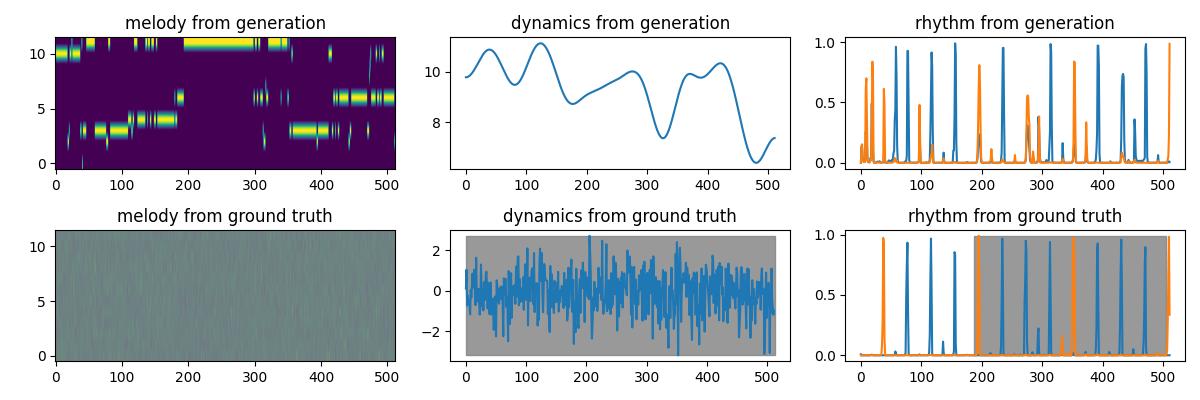

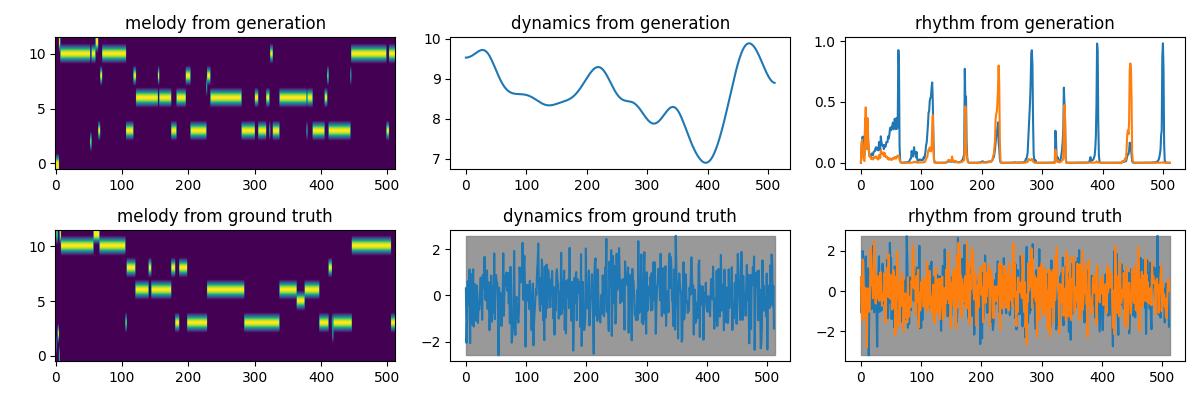

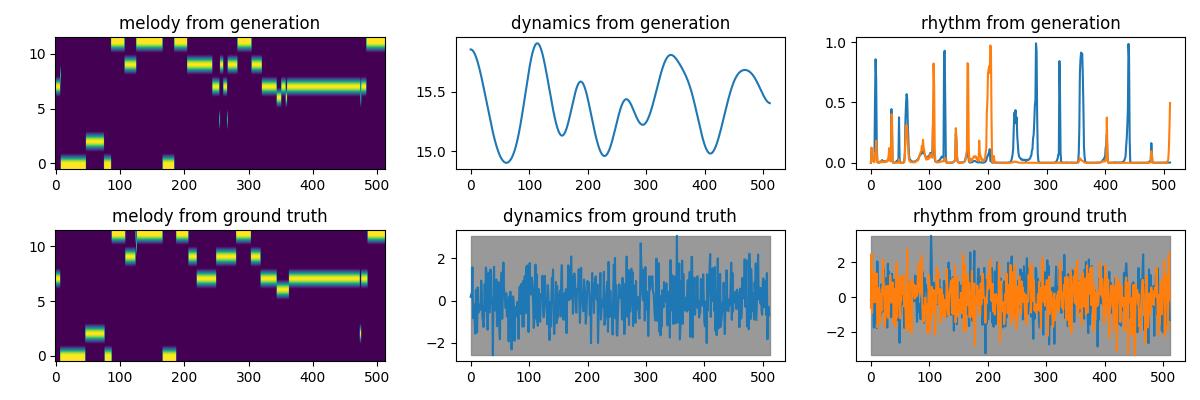

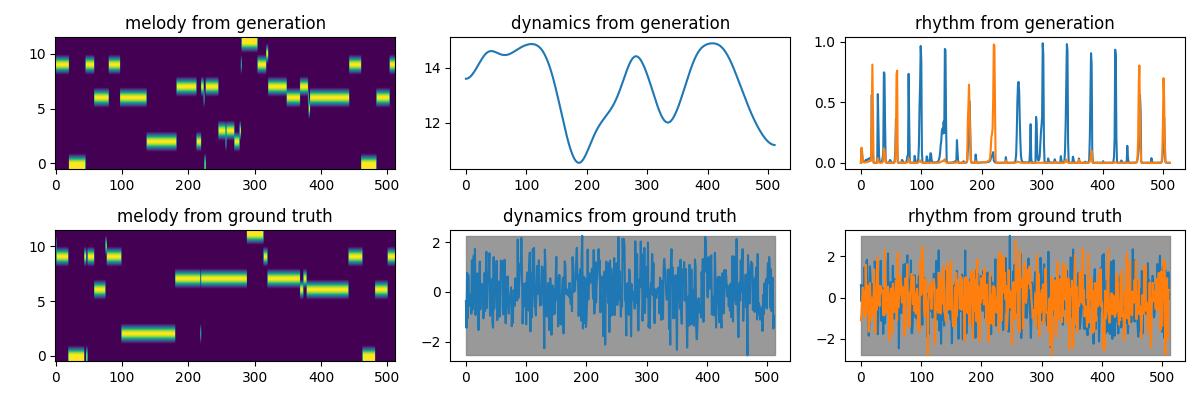

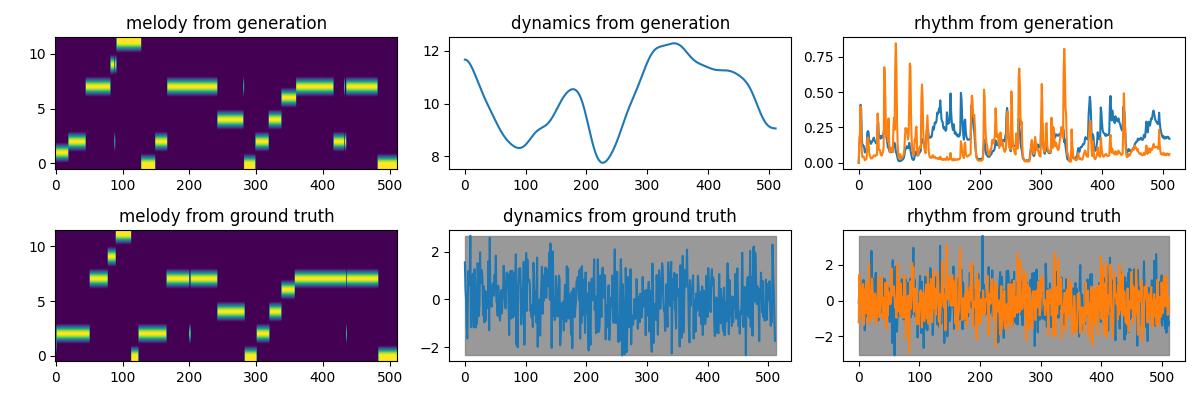

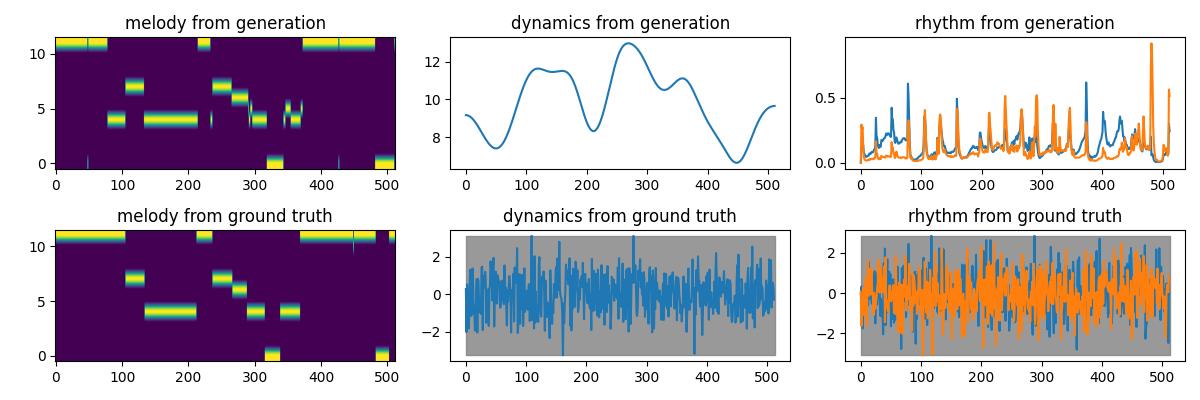

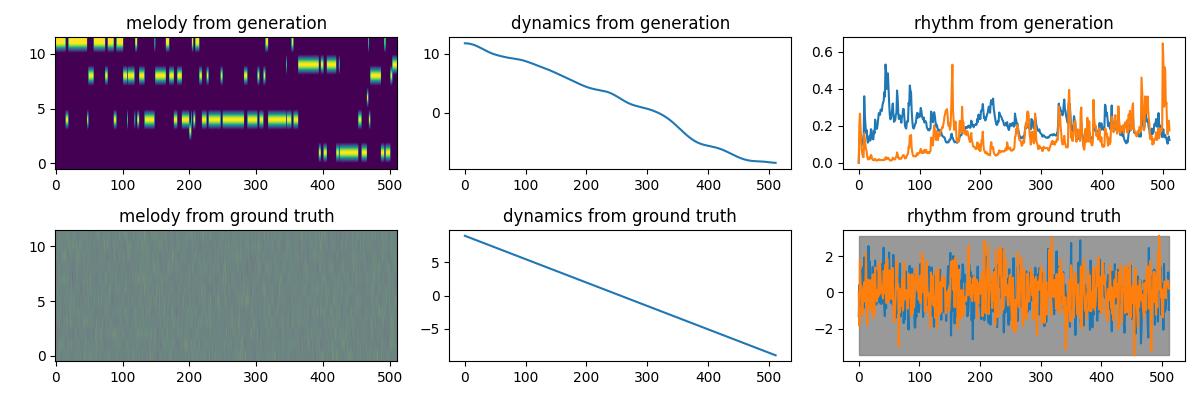

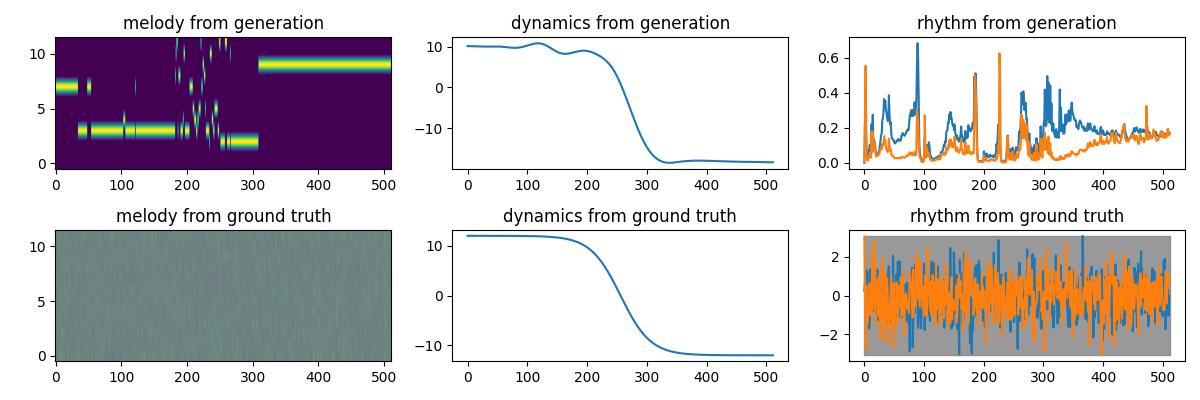

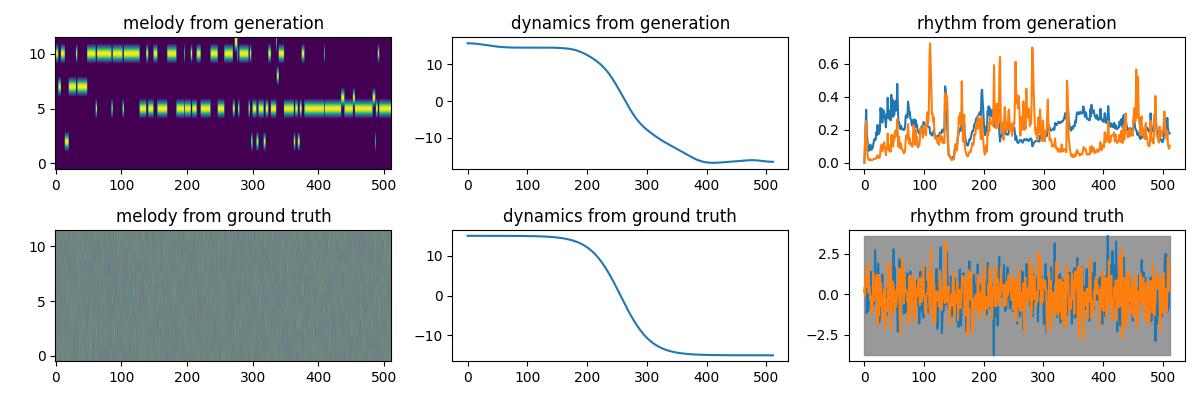

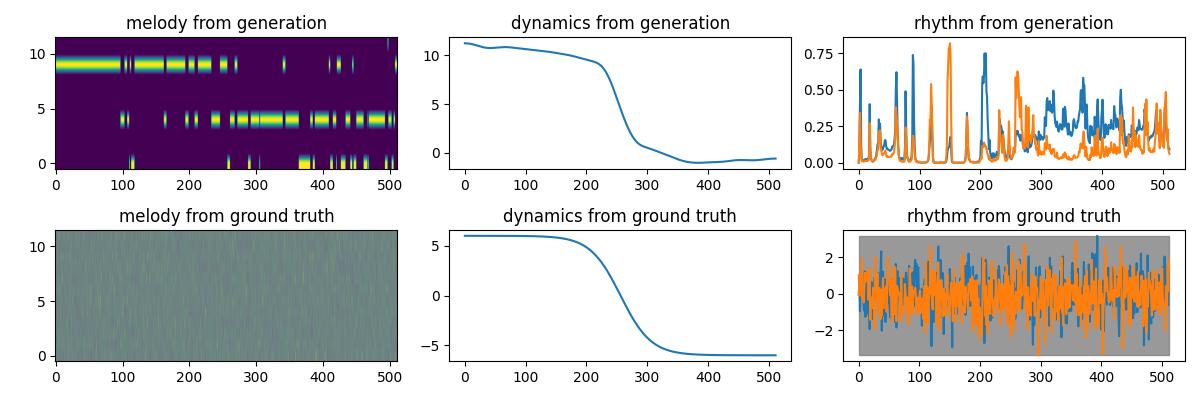

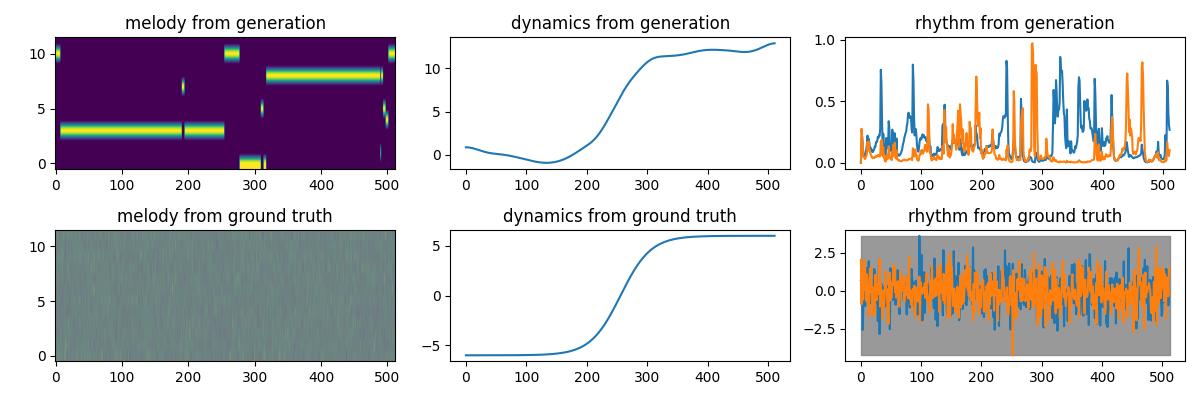

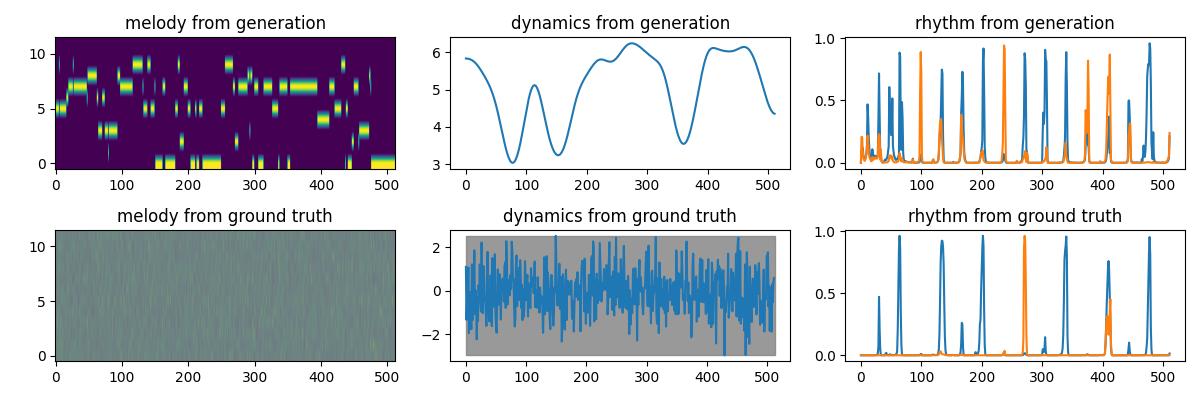

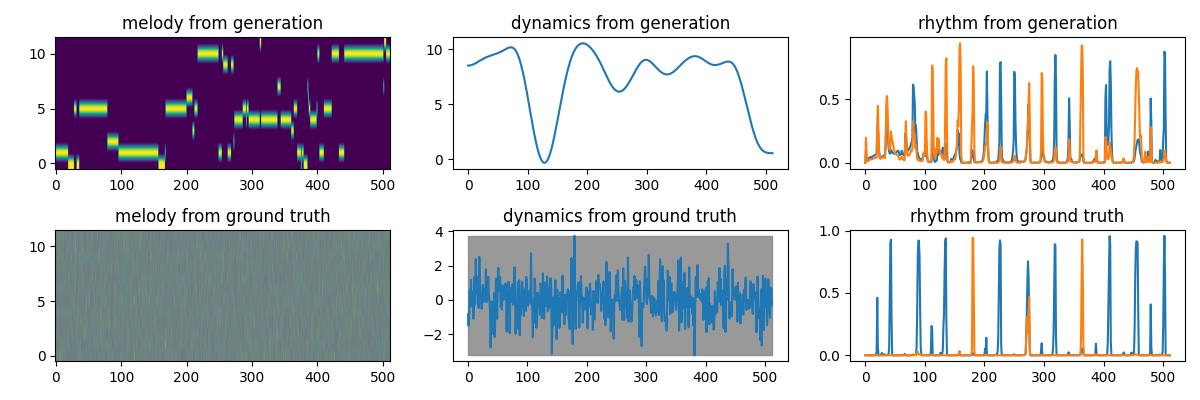

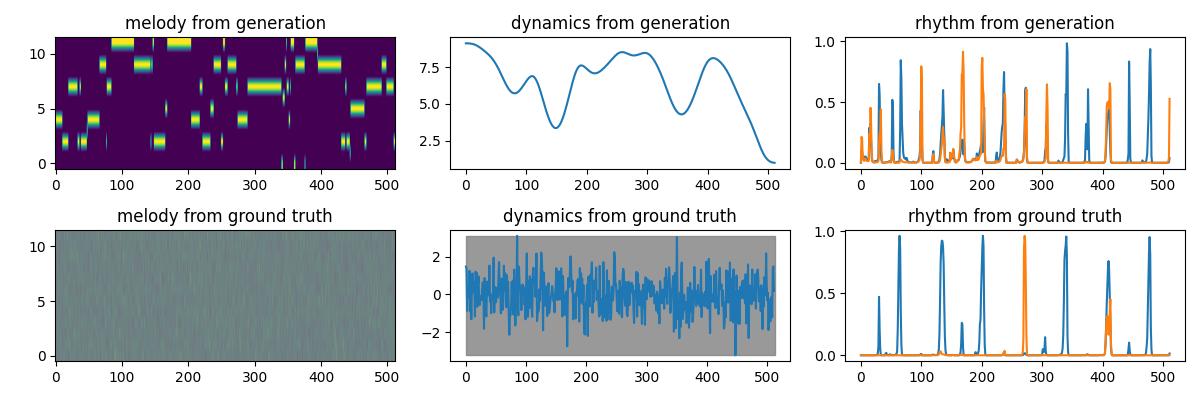

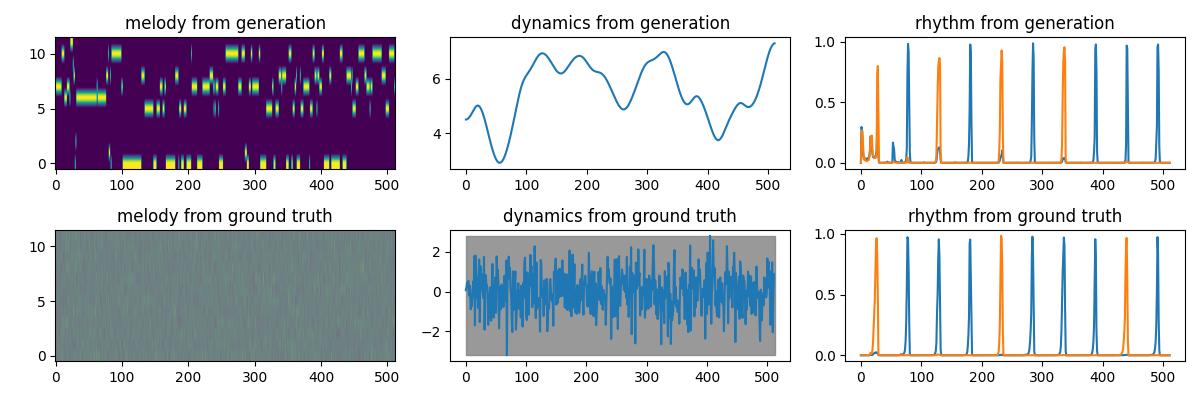

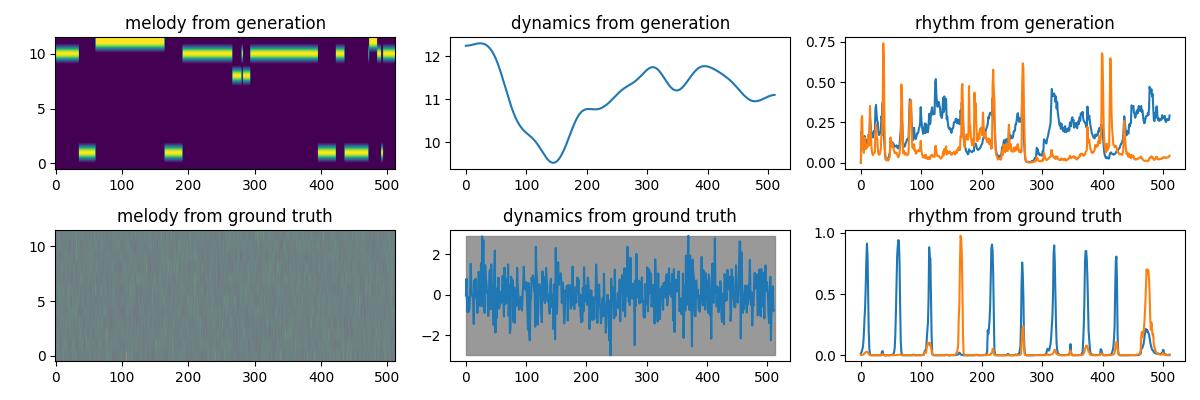

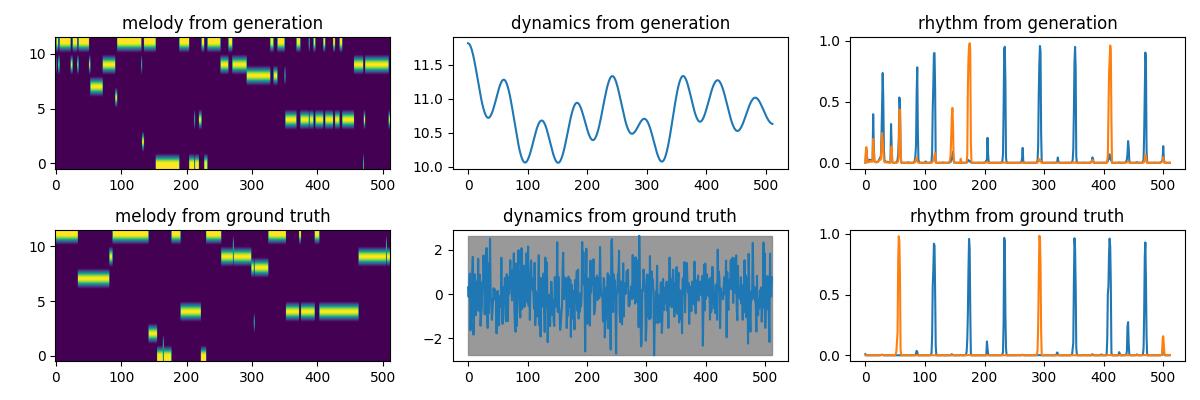

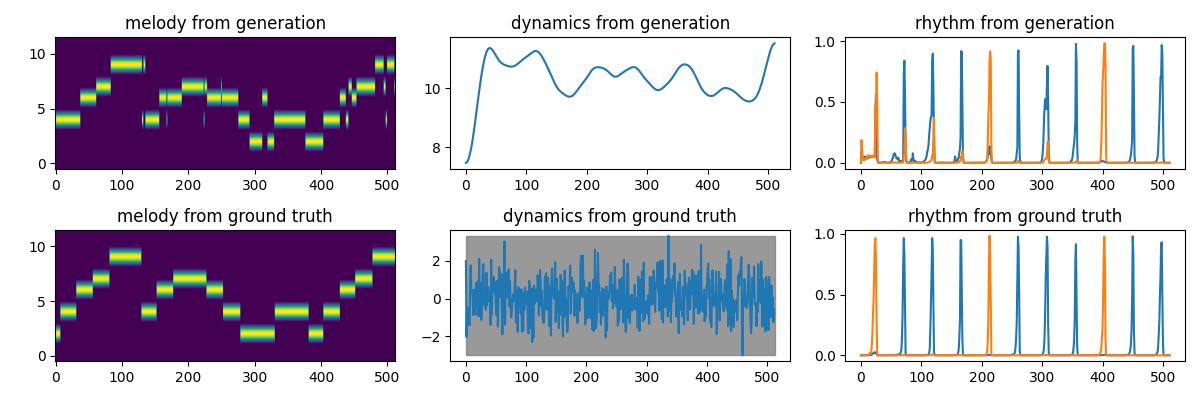

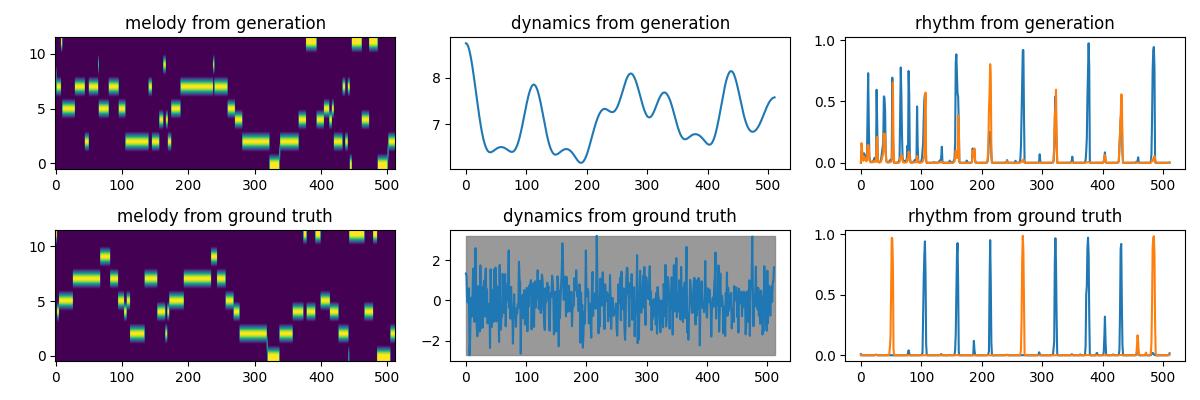

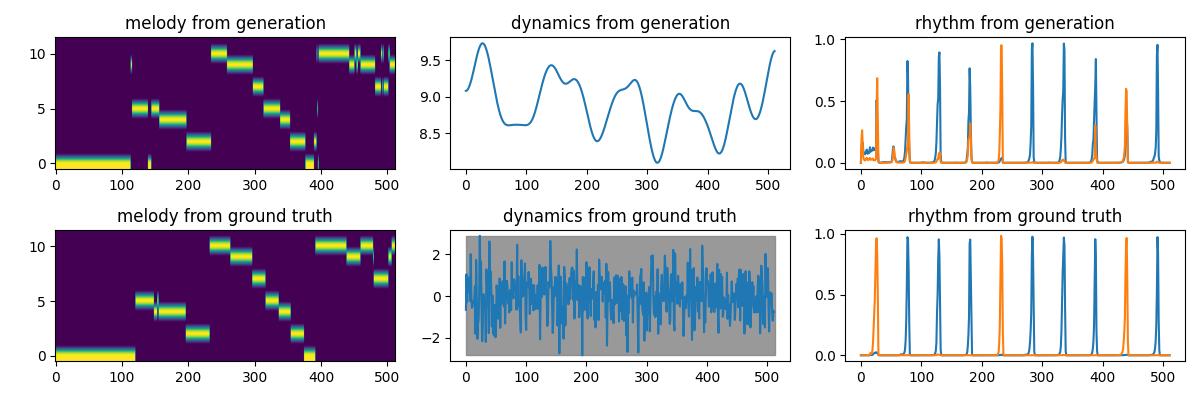

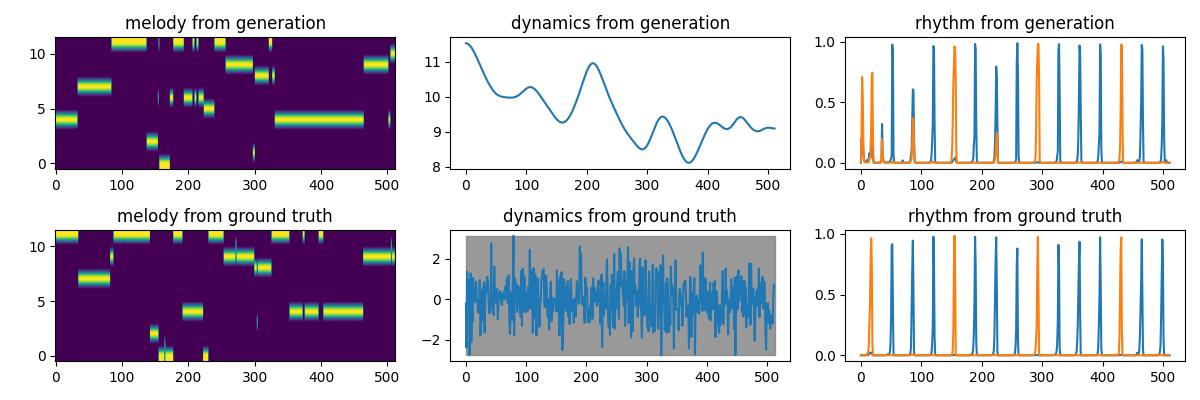

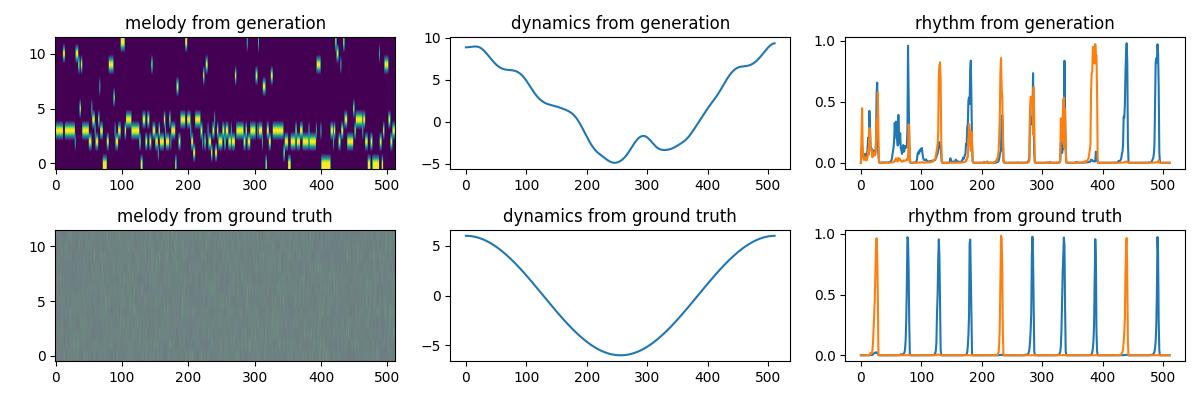

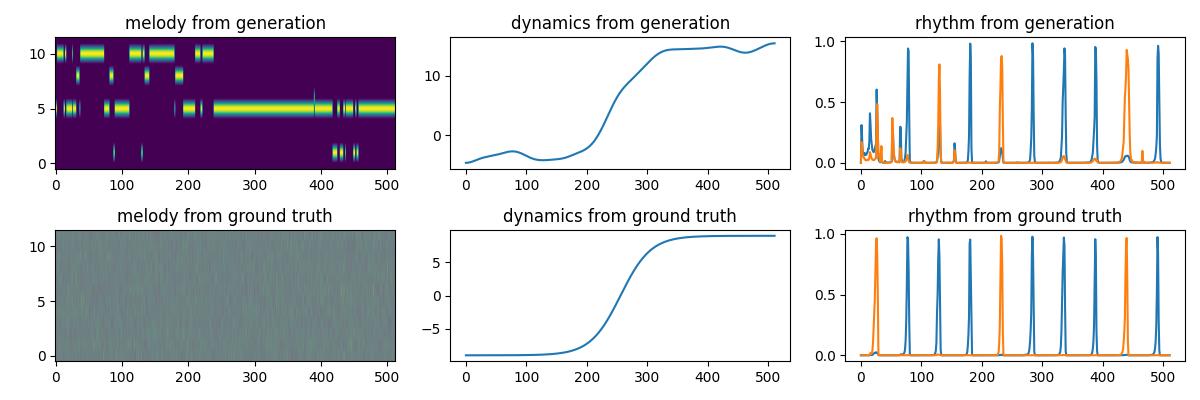

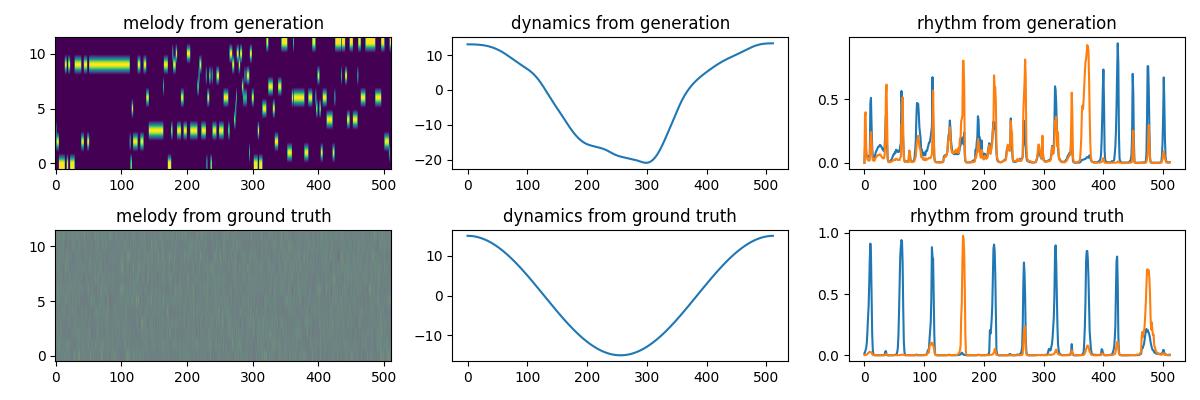

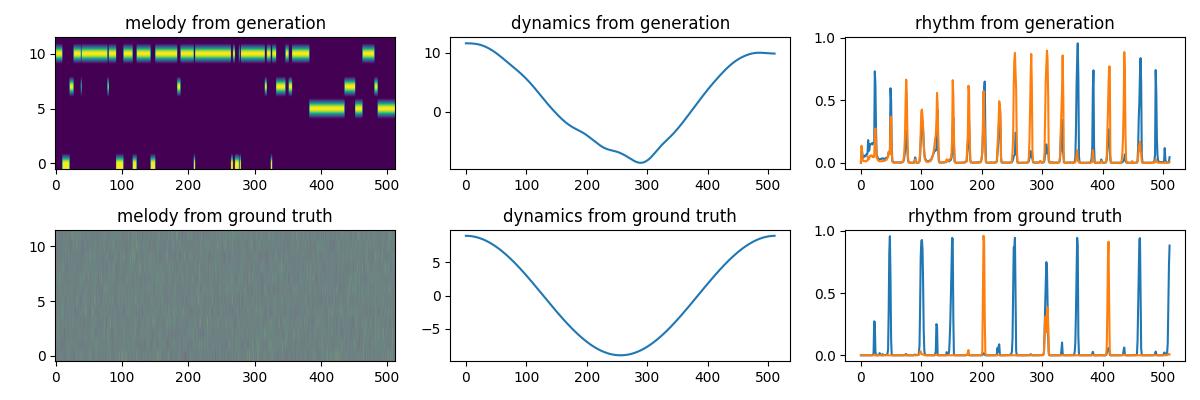

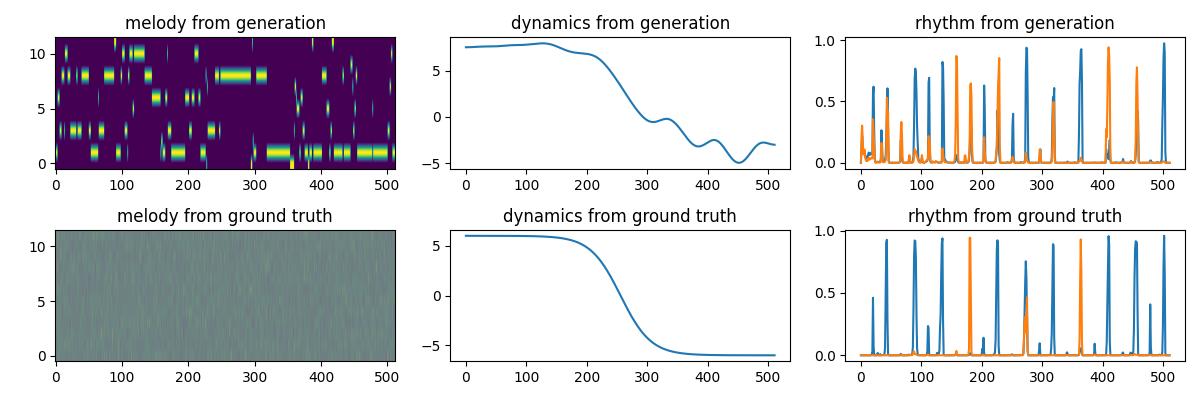

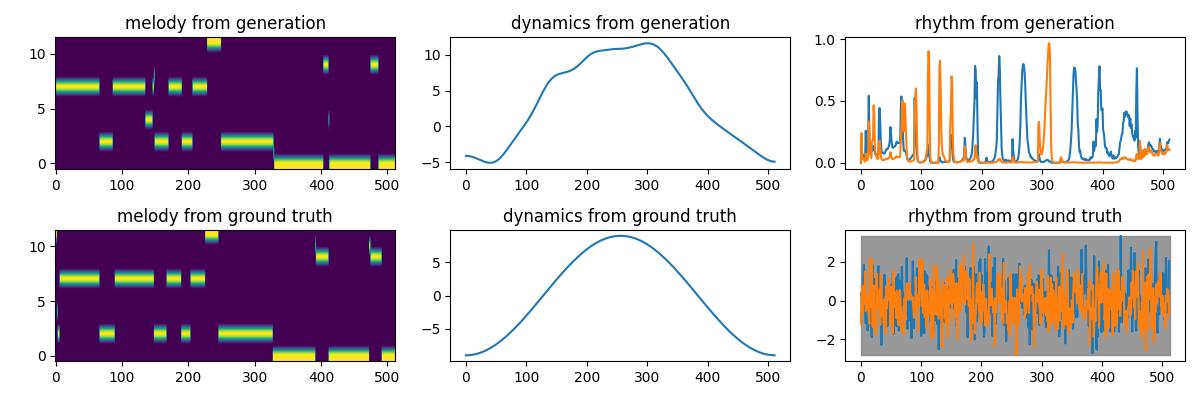

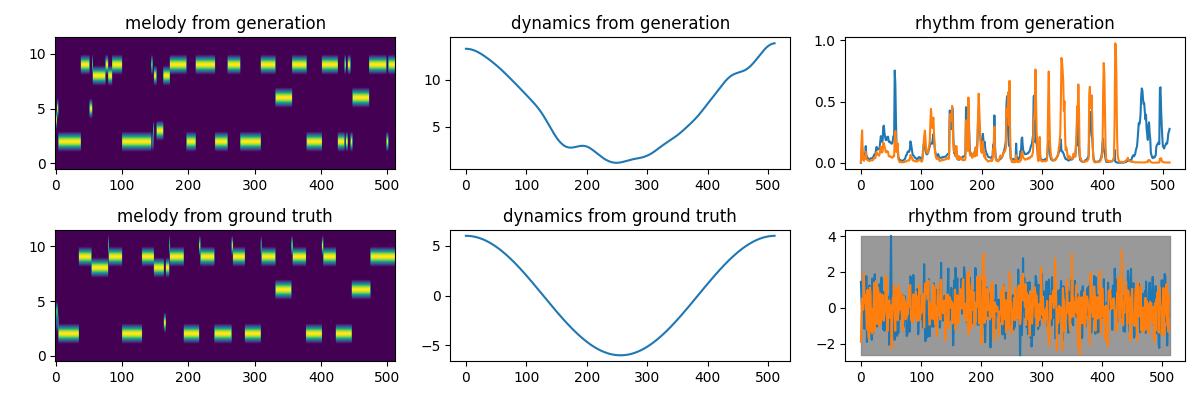

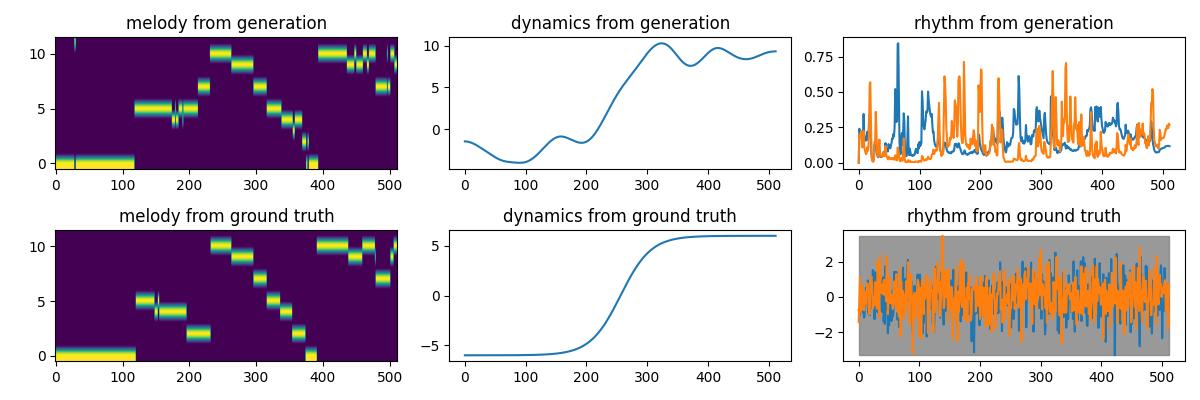

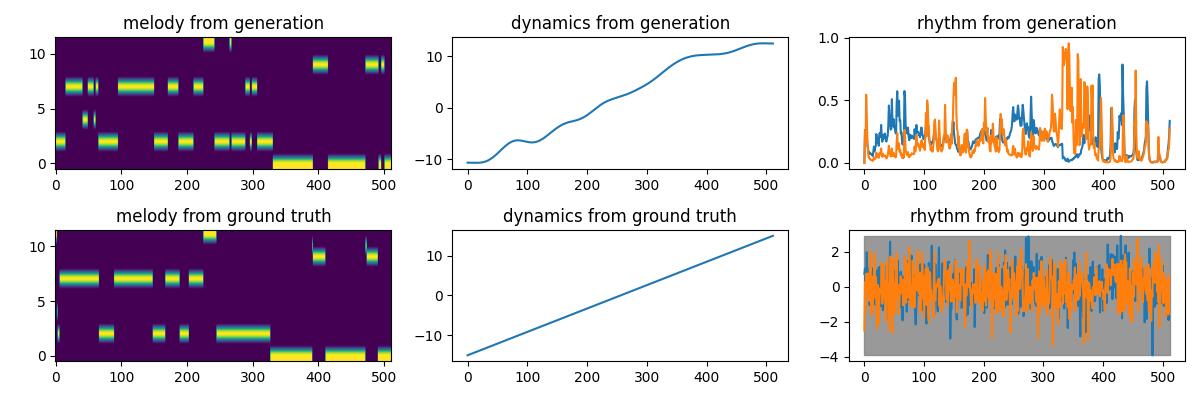

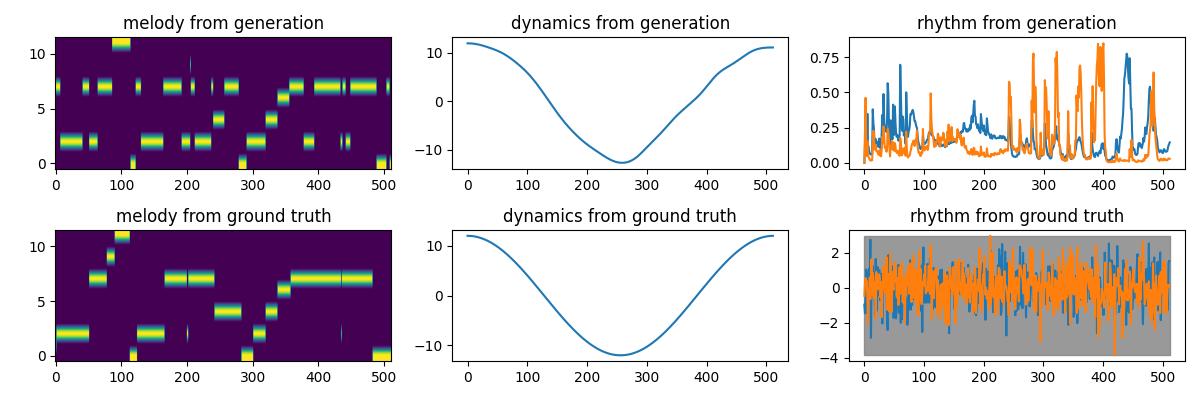

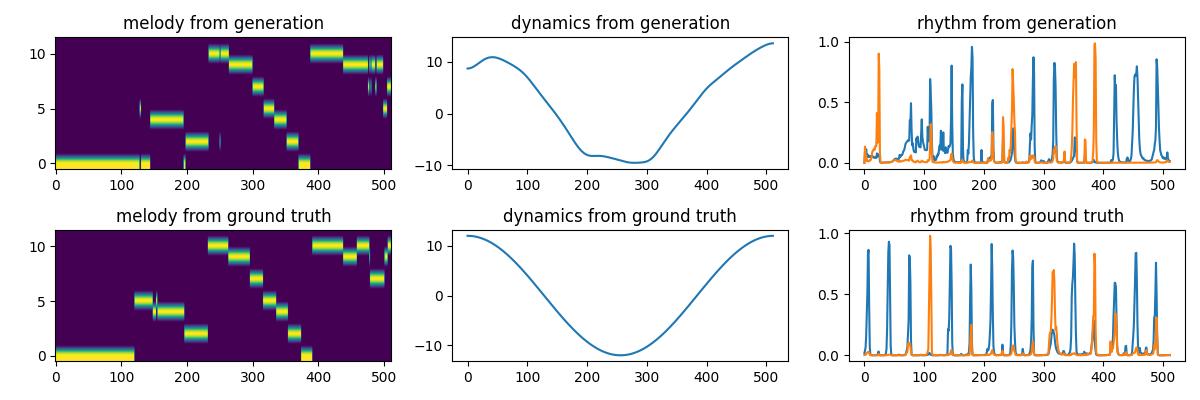

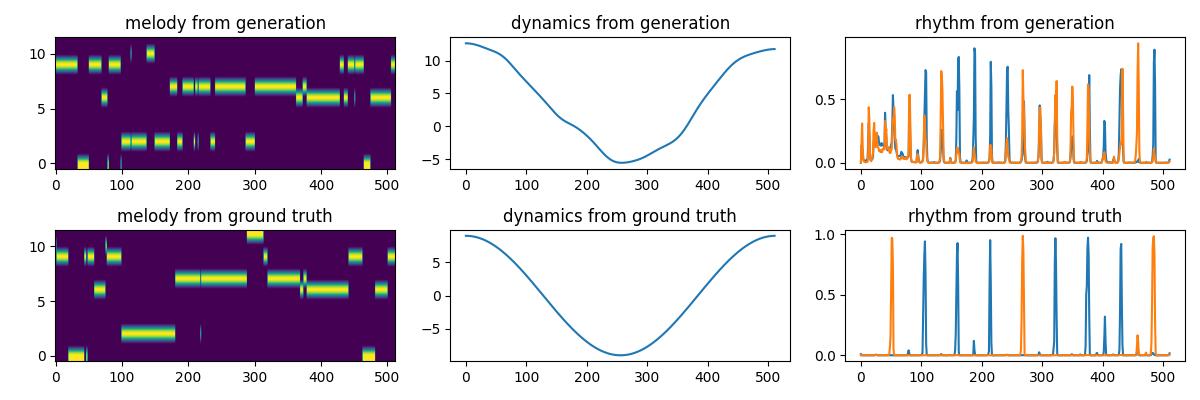

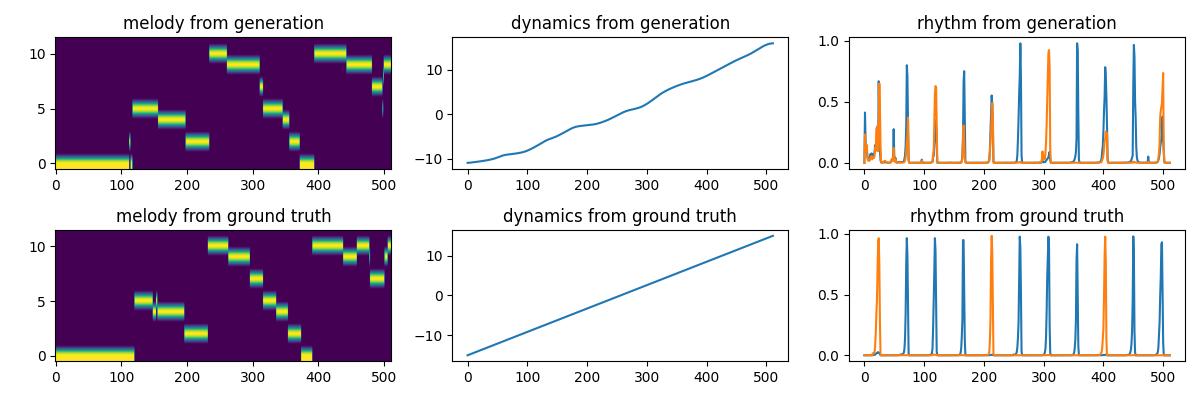

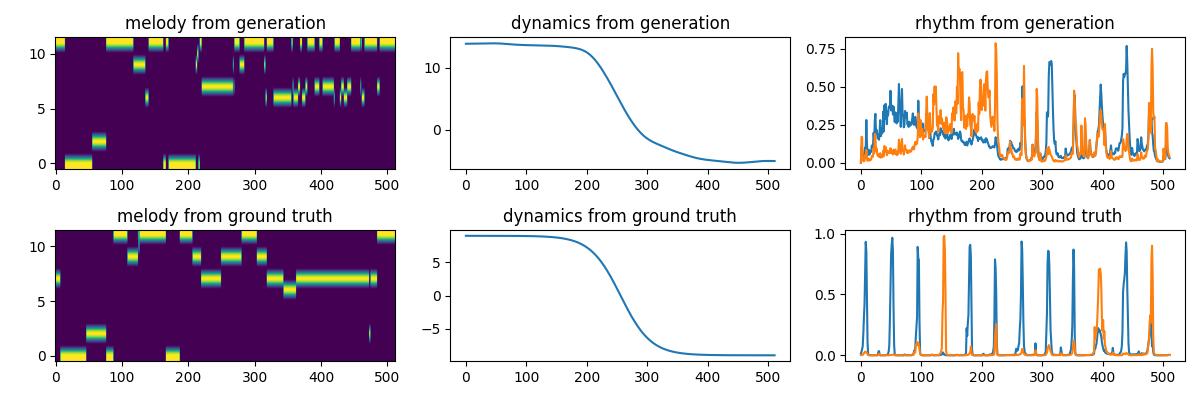

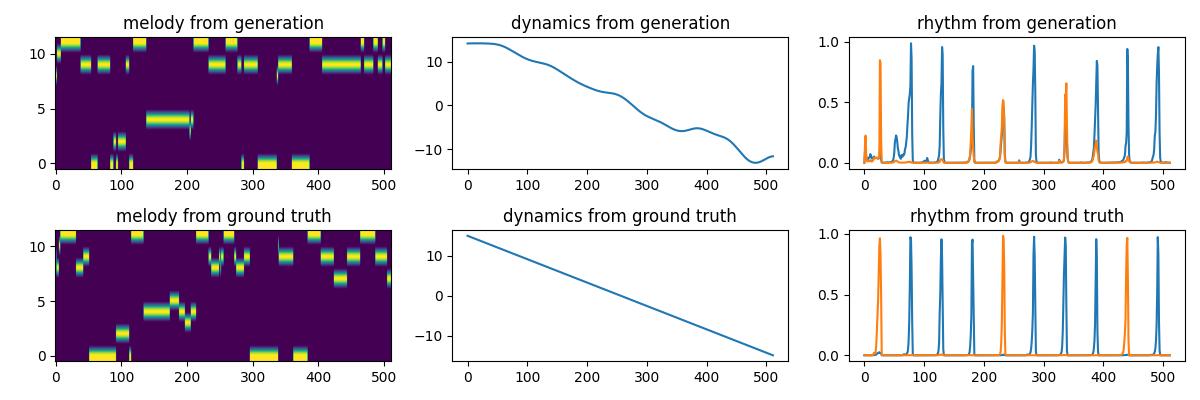

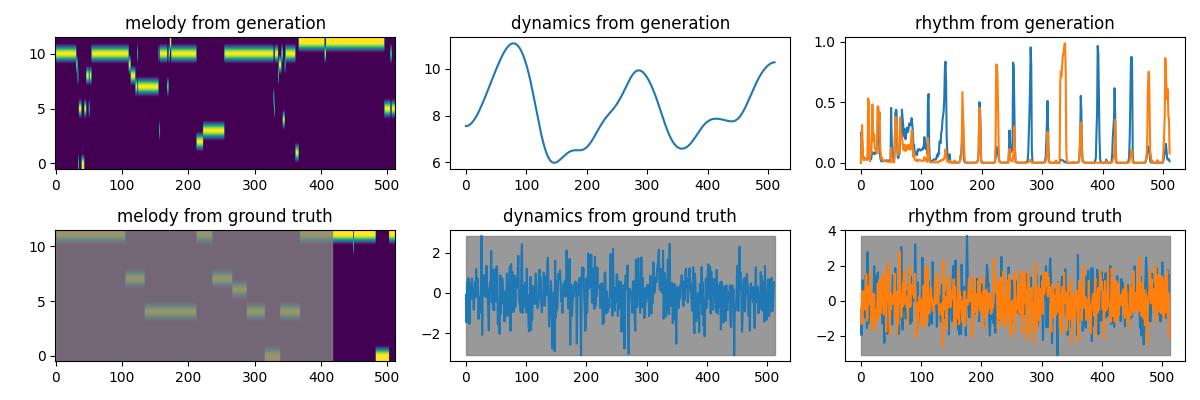

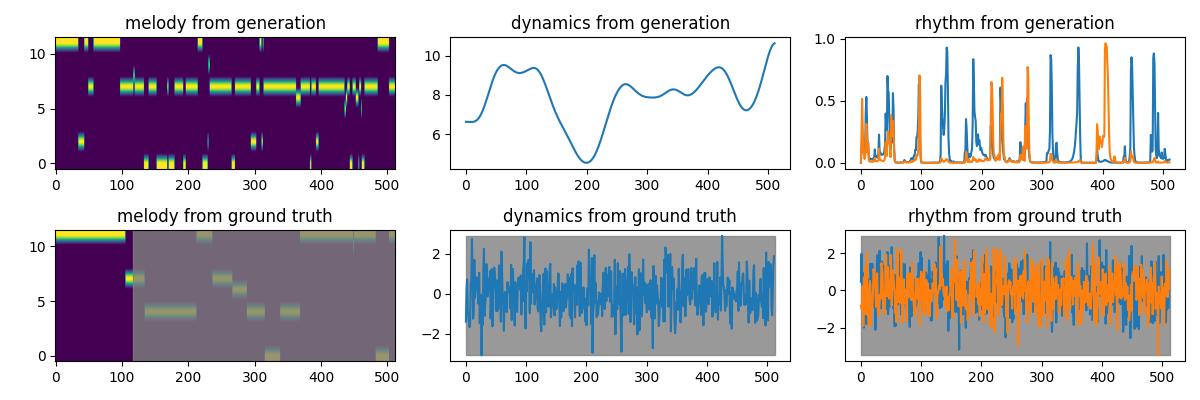

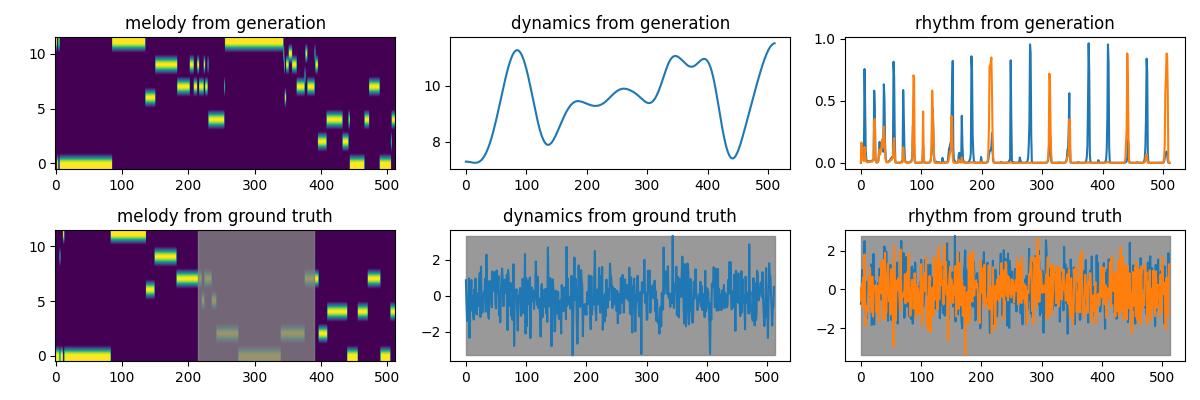

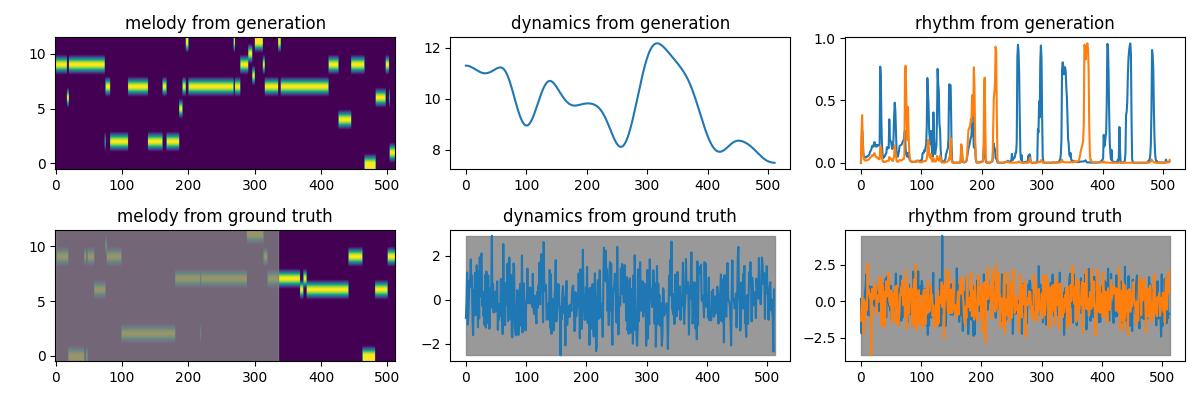

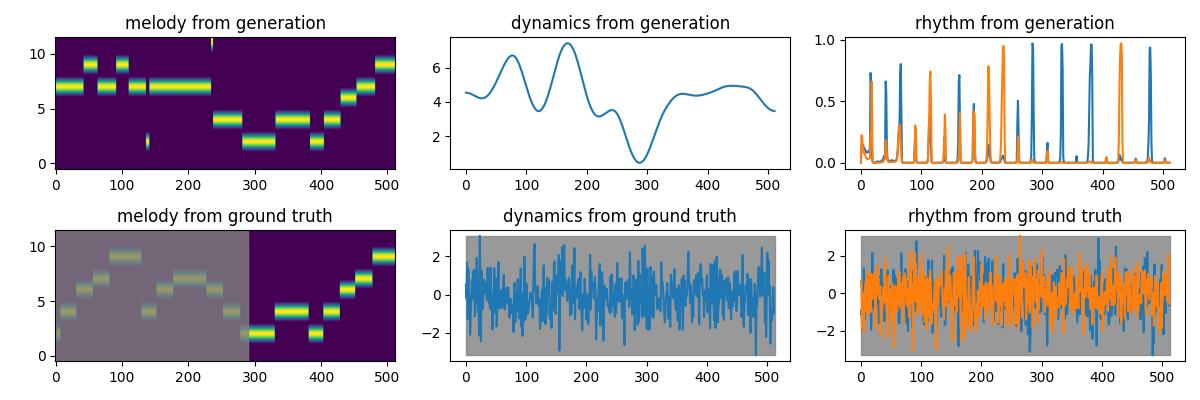

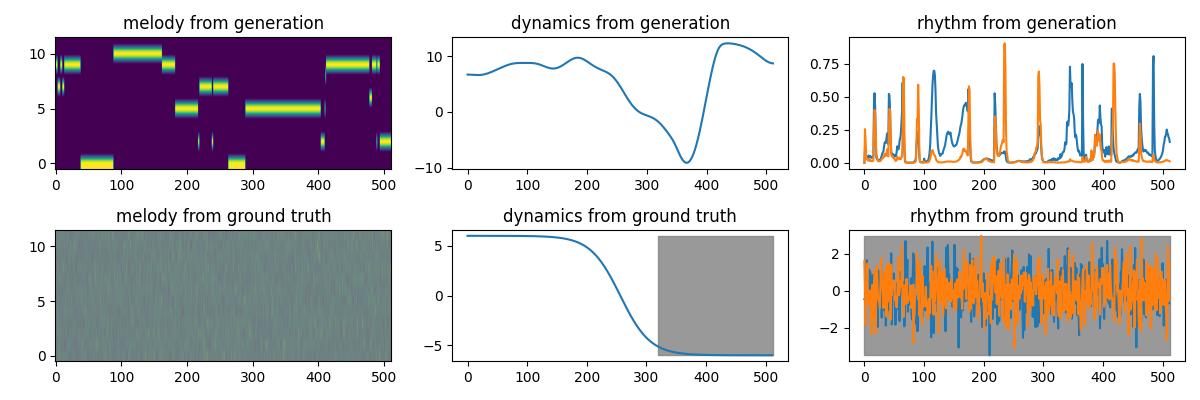

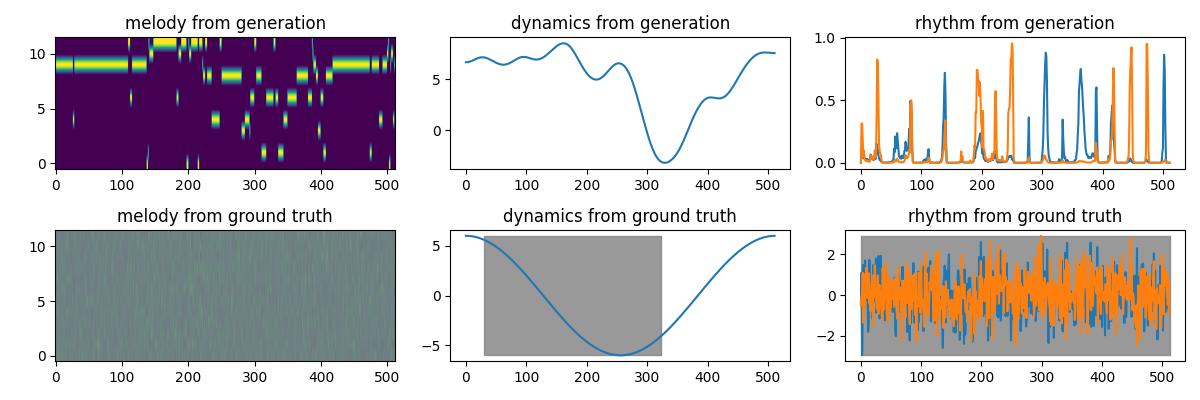

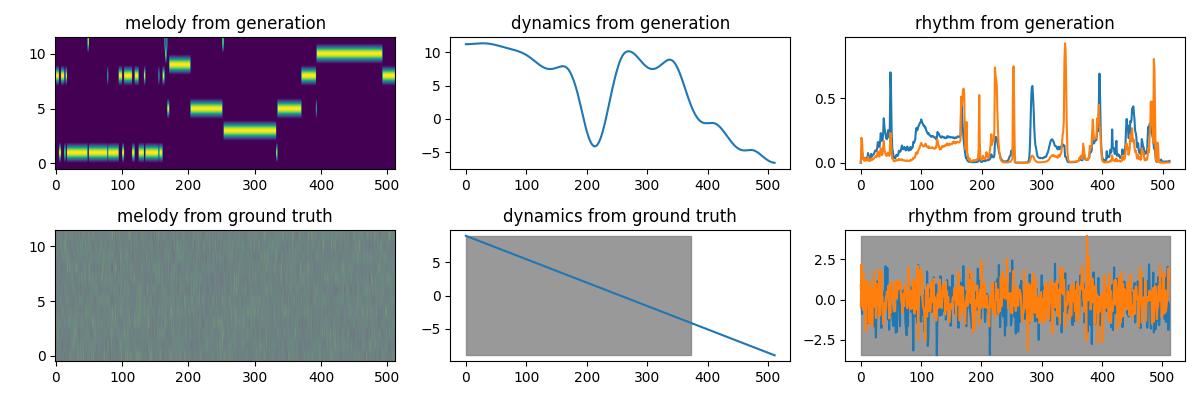

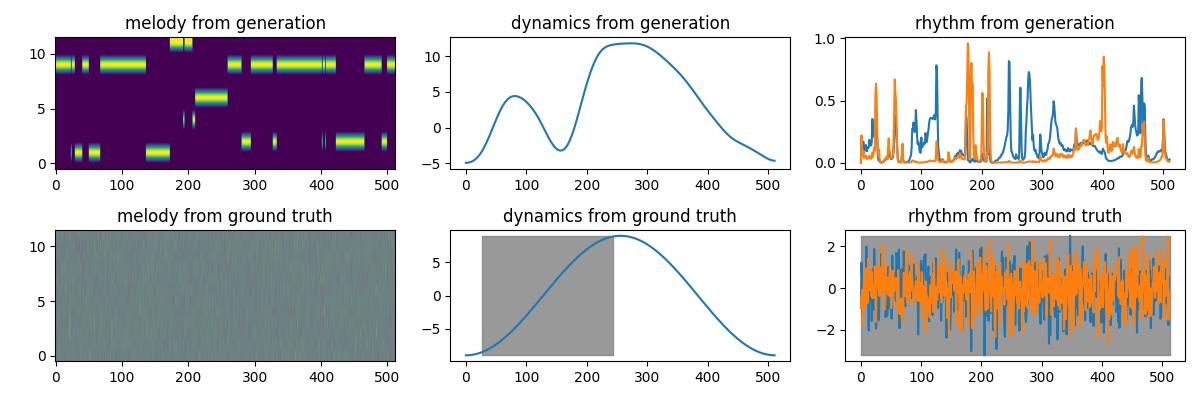

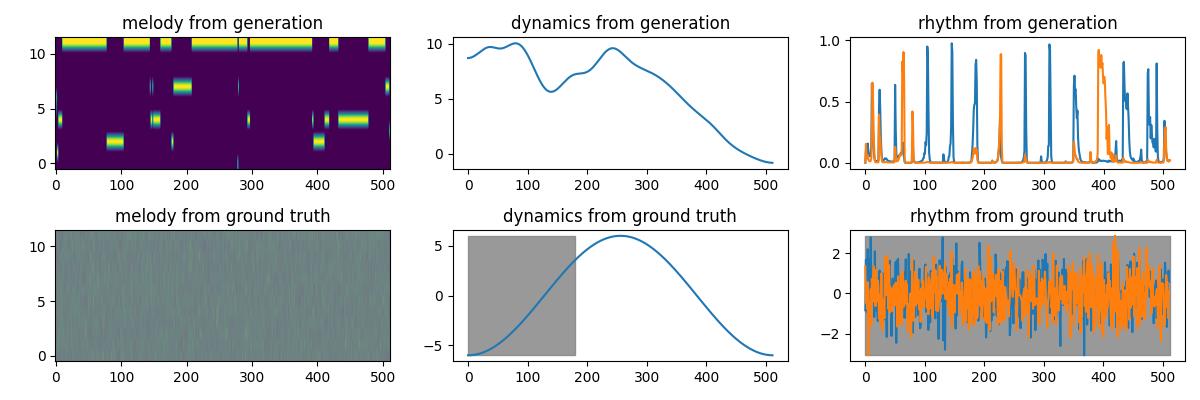

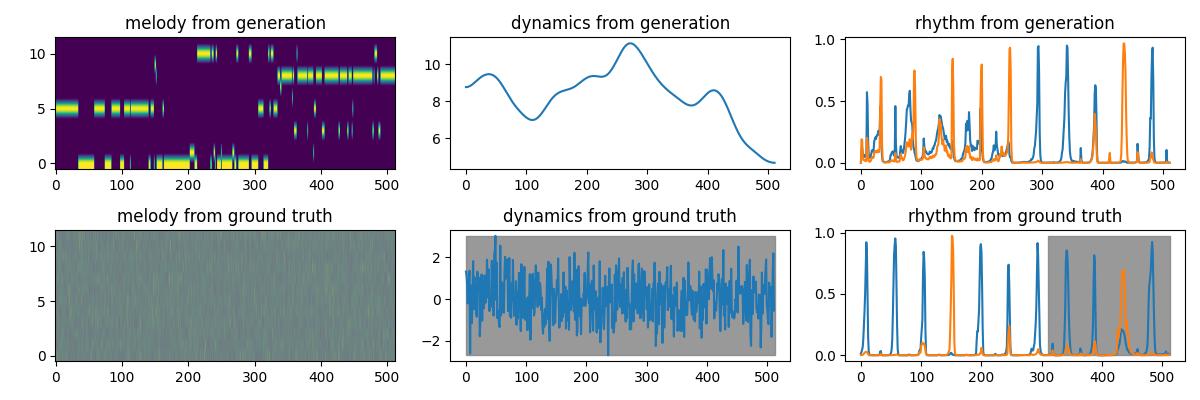

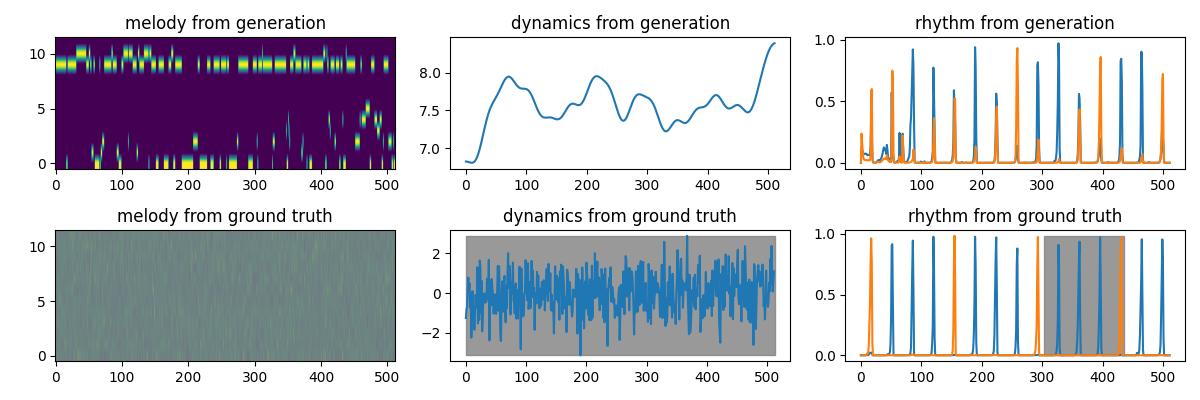

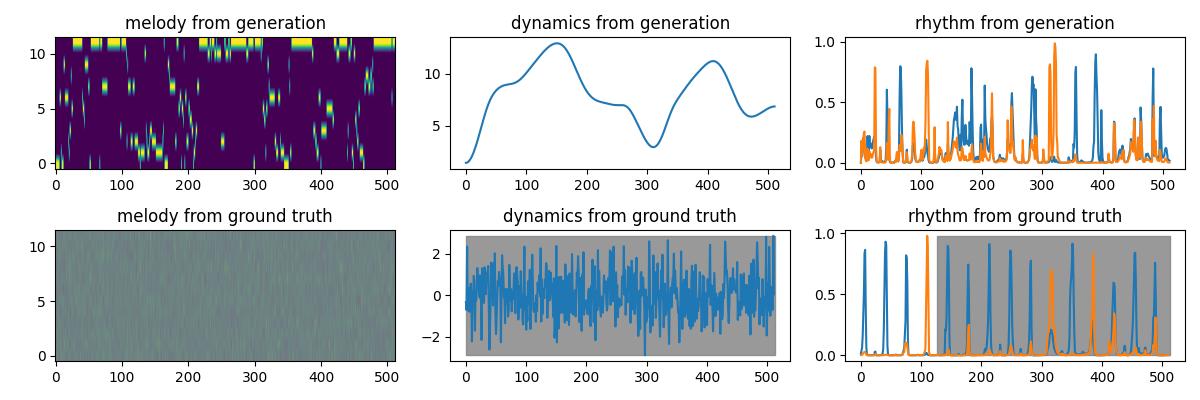

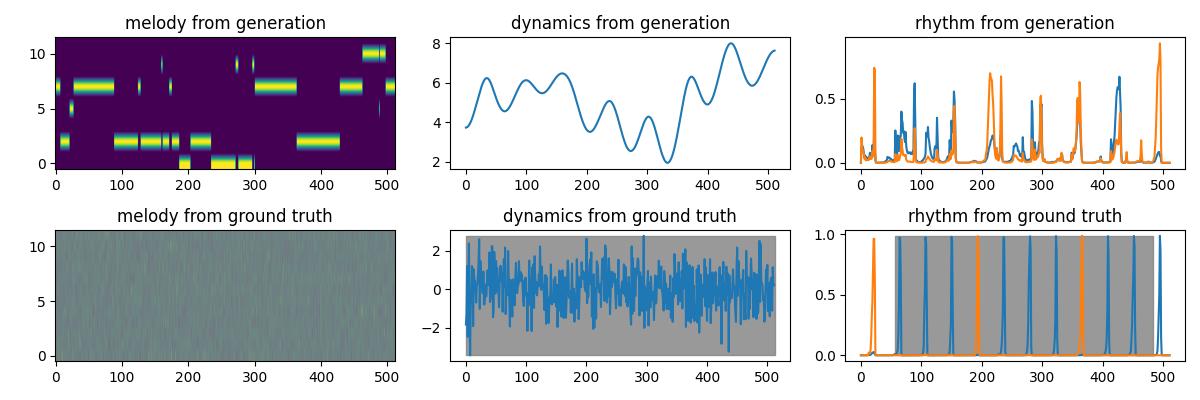

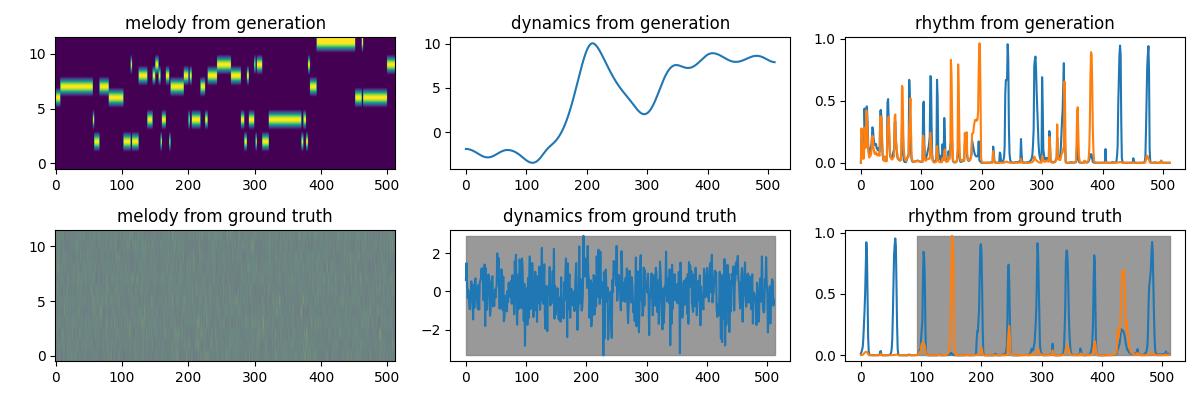

The examples below pair generated music with feature plots for each control combination: melody, dynamics, and rhythm individually, their combinations, and controls that are only partially specified in time. The examples here are mildly cherry-picked to show the best results. For random (non-cherry-picked) examples, please see the section below.

For each example, the first row of plots shows the controls extracted from the generated audio, and the second row shows the input controls. Gray shaded regions mark partially-specified controls: the control is not enforced inside the gray region. A melody reference file is also provided for examples that include melody control.
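As a rough illustration of what a partially-specified control looks like as data, the sketch below keeps a control curve only inside a chosen time window and returns a boolean mask marking the enforced frames (the complement corresponds to a gray region in the plots). The function name `partially_specify`, the frame rate, and the choice to zero out unenforced frames are assumptions made for this example, not a description of how the model represents missing controls.

```python
import numpy as np

def partially_specify(control, frame_rate, start_sec, end_sec):
    """Keep `control` only within [start_sec, end_sec).

    Hypothetical helper: returns the masked control and a boolean mask
    that is True where the control is enforced.
    """
    control = np.asarray(control, dtype=np.float64)
    n_frames = control.shape[0]
    start = max(0, int(round(start_sec * frame_rate)))
    end = min(n_frames, int(round(end_sec * frame_rate)))
    mask = np.zeros(n_frames, dtype=bool)
    mask[start:end] = True
    # Broadcast the time mask over any trailing feature dimensions.
    shape = (n_frames,) + (1,) * (control.ndim - 1)
    # Assumption: unenforced frames are filled with zeros.
    masked = np.where(mask.reshape(shape), control, 0.0)
    return masked, mask

# A 6-second dynamics curve at an assumed 10 frames per second,
# enforced only between seconds 2 and 4.
frame_rate = 10
dynamics = np.linspace(-30.0, -5.0, 60)
masked, mask = partially_specify(dynamics, frame_rate, 2.0, 4.0)
```

Returning the mask alongside the masked curve lets downstream code tell "no control given" apart from "control value happens to be zero".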

Melody Control

| Reference | Generated Music | Text | Feature Plots |
|---|---|---|---|
| | | happy, jazz | |
| | | sexy, pop | |
| | | happy, electronic | |
| | | sexy, electronic | |
| | | happy, acoustic | |

Dynamics Control

| Generated Music | Text | Feature Plots |
|---|---|---|
| | happy, rock | |
| | sexy, acoustic | |
| | sexy, classical | |
| | inspiring, electronic | |
| | nostalgic, pop | |

Rhythm Control

| Generated Music | Text | Feature Plots |
|---|---|---|
| | happy, classical | |
| | happy, rock | |
| | inspiring, jazz | |
| | mellow, hip-hop | |
| | mellow, world | |

Melody & Rhythm Control

| Reference | Generated Music | Text | Feature Plots |
|---|---|---|---|
| | | happy, electronic | |
| | | sad, jazz | |
| | | mellow, acoustic | |
| | | sexy, world | |
| | | sad, country | |

Dynamics & Rhythm Control

| Generated Music | Text | Feature Plots |
|---|---|---|
| | happy, acoustic | |
| | sexy, r&b | |
| | powerful, rock | |
| | mellow, acoustic | |
| | happy, electronic | |

Melody & Dynamics Control

| Reference | Generated Music | Text | Feature Plots |
|---|---|---|---|
| | | happy, rock | |
| | | powerful, electronic | |
| | | inspiring, classical | |
| | | angry, country | |
| | | powerful, country | |

Melody, Dynamics, & Rhythm Control

| Generated Music | Text | Feature Plots |
|---|---|---|
| | happy, jazz | |
| | angry, rock | |
| | dramatic, electronic | |
| | sexy, pop | |
| | dramatic, hip-hop | |

Partial Melody Control

| Reference | Generated Music | Text | Feature Plots |
|---|---|---|---|
| | | happy, jazz | |
| | | atmospheric, electronic | |
| | | powerful, hip-hop | |
| | | mellow, world | |
| | | mellow, jazz | |

Partial Dynamics Control

| Generated Music | Text | Feature Plots |
|---|---|---|
| | happy, acoustic | |
| | sexy, classical | |
| | happy, r&b | |
| | mellow, world | |
| | inspiring, acoustic | |

Partial Rhythm Control

| Generated Music | Text | Feature Plots |
|---|---|---|
| | happy, pop | |
| | sexy, acoustic | |
| | mellow, jazz | |
| | powerful, acoustic | |
| | inspiring, r&b | |

Examples (Random)

The examples below pair generated music with feature plots for each control combination: melody, dynamics, and rhythm individually, their combinations, and controls that are only partially specified in time. The examples here are randomly generated, not cherry-picked.

For each example, the first row of plots shows the controls extracted from the generated audio, and the second row shows the input controls. Gray shaded regions mark partially-specified controls: the control is not enforced inside the gray region. A melody reference file is also provided for examples that include melody control.
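When reading the plots, one simple way to put a number on how closely the extracted controls (first row) follow the input controls (second row) is a correlation computed only over the enforced frames. The sketch below does this for a one-dimensional control such as dynamics. The function name `enforced_correlation`, the use of Pearson correlation, and the synthetic curves are assumptions for illustration and are not taken from this page.

```python
import numpy as np

def enforced_correlation(input_ctrl, extracted_ctrl, mask=None):
    """Pearson correlation between two 1-D control curves.

    Hypothetical helper: if `mask` is given, only frames where it is
    True (the enforced, non-gray region) contribute to the score.
    """
    x = np.asarray(input_ctrl, dtype=np.float64)
    y = np.asarray(extracted_ctrl, dtype=np.float64)
    if mask is None:
        mask = np.ones(x.shape[0], dtype=bool)
    x, y = x[mask], y[mask]
    return float(np.corrcoef(x, y)[0, 1])

# Synthetic example: the control is enforced on frames 20..59 only.
input_ctrl = np.linspace(-30.0, -5.0, 60)
enforced = np.zeros(60, dtype=bool)
enforced[20:] = True

# Pretend extraction result: unrelated values in the unenforced region,
# a slightly perturbed copy of the input in the enforced region.
extracted_ctrl = input_ctrl.copy()
extracted_ctrl[:20] = -10.0
extracted_ctrl[20:] += 0.5 * np.sin(np.arange(40))

score = enforced_correlation(input_ctrl, extracted_ctrl, enforced)
```

Restricting the score to the enforced frames matters for the partially-specified examples, where the model is free to do anything in the gray region.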

Melody Control

| Reference | Generated Music | Text | Feature Plots |
|---|---|---|---|
| | | atmospheric, rock | |
| | | angry, hip-hop | |
| | | sad, hip-hop | |
| | | mellow, classical | |
| | | angry, acoustic | |

Dynamics Control

| Generated Music | Text | Feature Plots |
|---|---|---|
| | sexy, classical | |
| | angry, country | |
| | happy, classical | |
| | mellow, electronic | |
| | happy, country | |

Rhythm Control

| Generated Music | Text | Feature Plots |
|---|---|---|
| | powerful, world | |
| | mellow, jazz | |
| | sexy, country | |
| | sad, hip-hop | |
| | inspiring, hip-hop | |

Melody & Rhythm Control

| Reference | Generated Music | Text | Feature Plots |
|---|---|---|---|
| | | atmospheric, pop | |
| | | dramatic, acoustic | |
| | | mellow, acoustic | |
| | | inspiring, world | |
| | | atmospheric, hip-hop | |

Dynamics & Rhythm Control

| Generated Music | Text | Feature Plots |
|---|---|---|
| | angry, rock | |
| | mellow, hip-hop | |
| | powerful, country | |
| | mellow, classical | |
| | powerful, pop | |

Melody & Dynamics Control

| Reference | Generated Music | Text | Feature Plots |
|---|---|---|---|
| | | happy, jazz | |
| | | sad, country | |
| | | angry, world | |
| | | happy, electronic | |
| | | powerful, classical | |

Melody, Dynamics, & Rhythm Control

| Generated Music | Text | Feature Plots |
|---|---|---|
| | powerful, jazz | |
| | mellow, world | |
| | sad, r&b | |
| | mellow, hip-hop | |
| | nostalgic, r&b | |

Partial Melody Control

| Reference | Generated Music | Text | Feature Plots |
|---|---|---|---|
| | | angry, jazz | |
| | | sexy, acoustic | |
| | | atmospheric, hip-hop | |
| | | inspiring, country | |
| | | happy, world | |

Partial Dynamics Control

| Generated Music | Text | Feature Plots |
|---|---|---|
| | sexy, jazz | |
| | dramatic, r&b | |
| | sad, jazz | |
| | nostalgic, acoustic | |
| | sad, r&b | |

Partial Rhythm Control

| Generated Music | Text | Feature Plots |
|---|---|---|
| | nostalgic, hip-hop | |
| | sad, electronic | |
| | angry, r&b | |
| | sad, classical | |
| | inspiring, acoustic | |